In this series of articles we’ll follow along with an upgrade from a relatively complex, customized IBM WebSphere ILOG JRules 7.1 installation to IBM Operational Decision Manager (ODM) 8.5.

ODM 7.5, 8 and 8.5 bring us a number of valuable features and capabilities:

Highlights:

- An enhanced business user experience, with new change management and governance capabilities and the Business Console

- Branching and merging of branches

- A simplified, business-focused collaborative environment

o A governance framework for managing releases and multiple referenced rule sets (services)

- The ability to execute business rules from mobile applications

- Event capabilities: the addition of Complex Event Processing (CEP) to ODM

- The ability for business users to test rules with complex results using Excel scenarios

- XXX Mainframe

- Operational enhancements

- The ability to decouple the management console from the execution environment and manage embedded rules remotely

- Enhanced automatic generation of web services for decision points (Hosted Transparent Decision Services, HTDS)

For reference on features:

- What’s new in IBM ODM 8

- What’s new in IBM ODM 8.5

These new capabilities, together with the potential end of life of 7.1, are the usual drivers for upgrading to a later version.

In this article we look specifically at migrating Decision Center (formerly known as Rule Team Server). Later articles in this series will cover rules migration, testing, migration of customized Decision Validation Services, Decision Server and more.

Migration of Decision Center Data (fka RTS)

Decision Center stores its information in a database. The database structure changes from version to version, and IBM provides scripts to help migrate the data to the latest version. The first issue we ran into was that the Ant migration documentation was confusing. Initially we thought the script needed a running 7.1 Decision Center server and should be pointed at an 8.5 database. We edited the properties file in the bin folder, teamserver-anttasks.properties, and added the OldDatabaseSchemaName (the 7.1 schema), the output file location where the generated SQL should be written, the 7.1 data source name, and the server URL and login credentials.

The migration script offers a number of targets for migrating different aspects; for the schema we used the gen-migration71-script target of the ant command.
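A blank or misspelled entry in teamserver-anttasks.properties only shows up once the Ant task runs against the server. A minimal pre-flight check, sketched below in plain Java, loads the file with java.util.Properties and lists required keys that are missing or blank. Apart from OldDatabaseSchemaName, the key names in main are hypothetical placeholders; take the real names from the comments in your own copy of the file.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical pre-flight check for the Ant migration properties; not part of ODM. */
final class AntPropertiesCheck {

    /** Returns the required keys that are absent or blank, in the order they were requested. */
    static List<String> missingKeys(Properties props, List<String> requiredKeys) {
        List<String> missing = new ArrayList<>();
        for (String key : requiredKeys) {
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    /** Loads a .properties file using the standard ISO-8859-1 encoding. */
    static Properties load(Path file) {
        Properties props = new Properties();
        try (InputStream in = Files.newInputStream(file)) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        Path file = Path.of(args.length > 0 ? args[0] : "teamserver-anttasks.properties");
        // Only OldDatabaseSchemaName comes from the article; the other key names are
        // hypothetical placeholders. Copy the real ones from your properties file.
        List<String> required = List.of("OldDatabaseSchemaName", "outputFile",
                "datasourceName", "serverUrl", "login", "password");
        List<String> missing = missingKeys(load(file), required);
        if (missing.isEmpty()) {
            System.out.println("All required keys are set in " + file);
        } else {
            System.out.println("Missing or blank keys in " + file + ": " + missing);
            System.exit(1);
        }
    }
}
```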

DC Database migration Issue 1: Documentation

The following exception was thrown when we tried to run the conversion:

```
[gen-migration71-script] ilog.rules.teamserver.model.IlrConnectException: Could not deserialize result from HTTP invoker remote service [http://localhost:8080/teamserver/remoting/session]; nested exception is java.io.InvalidClassException: ilog.rules.teamserver.model.IlrSessionContext; local class incompatible: stream classdesc serialVersionUID = 4729857957054555657, local class serialVersionUID = 8906827035412667122
```

Solution:

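After some experimentation and discussion with IBM, we found that the old 7.1 schema has to be on the same database server as the 8.5 database, and that the Ant script has to be pointed at the 8.5 Decision Center server, not the 7.1 RTS server. The serialVersionUID mismatch in the exception is the symptom of pointing it at the wrong server: the 8.5 client classes cannot deserialize the session object returned by the 7.1 server.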
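The InvalidClassException is standard Java serialization behaviour: the client refuses to deserialize an object whose serialVersionUID differs from the one in its own copy of the class, which is what happens when client jars and server come from different releases. One way to confirm which release the Ant classpath is using is to print the serialVersionUID it sees for the class named in the exception, using java.io.ObjectStreamClass. This is a diagnostic sketch, not part of the ODM tooling; run it with the same classpath as the Ant task and compare the output with the two values in the stack trace.

```java
import java.io.ObjectStreamClass;

/** Prints the serialVersionUID that the current classpath sees for a class (diagnostic sketch). */
final class SerialVersionCheck {

    /** Returns the class's serialVersionUID, or null if the class is not Serializable. */
    static Long serialVersionUidOf(Class<?> type) {
        ObjectStreamClass descriptor = ObjectStreamClass.lookup(type);
        return descriptor == null ? null : descriptor.getSerialVersionUID();
    }

    /** Loads the class by name without running its static initializers. */
    static Long serialVersionUidOf(String className) throws ClassNotFoundException {
        Class<?> type = Class.forName(className, false, SerialVersionCheck.class.getClassLoader());
        return serialVersionUidOf(type);
    }

    public static void main(String[] args) throws ClassNotFoundException {
        // Default taken from the exception above; pass another class name as the first argument.
        String className = args.length > 0 ? args[0] : "ilog.rules.teamserver.model.IlrSessionContext";
        System.out.println(className + " serialVersionUID = " + serialVersionUidOf(className));
    }
}
```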

DC Database migration Issue 2: Inconsistent Data

There were two issues: missing data, and duplicate names that must now be unique in version 8.5. We are not sure why data was missing, but the script failed on missing data in the artifact tables where information existed in the version tables for some rule versions that were part of a baseline. The DVS simulation report names were also duplicated and had to be made unique because of a new table constraint.

Solution:

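The solution was to delete the rule versions that were missing from the artifact table (their baseline was in the recycle bin) and to give the duplicate DVS reports unique names:

```sql
-- Remove each orphaned rule version; <version id> is the ID of the affected VERSION row.
-- Delete the baseline content first, since it references the version.
delete from baselinecontent where version = <version id>;
delete from version where id = <version id>;

-- Rename each duplicate report. "WHERE ID = <report id>" is our assumption about the key
-- column; without a WHERE clause this update would rename every report.
update SCENARIOSUITEREPORT set NAME = '<unique name>' where ID = <report id>;
```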
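When there are many duplicate report names, it can be easier to work out the new names in one pass than to pick them by hand. The sketch below assumes the new constraint covers the NAME column on its own and that ID is the key column (both assumptions, not checked against the 8.5 schema). It keeps the first occurrence of each name and appends a numeric suffix to later duplicates, skipping suffixes that would clash with names that already exist.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class UniqueReportNames {

    /** One SCENARIOSUITEREPORT row; using ID as the key column is an assumption. */
    record Report(long id, String name) {}

    /**
     * Returns report id -> new name for every report whose name repeats an earlier one.
     * The first report with a given name keeps it; later ones get " (2)", " (3)", ...,
     * skipping any candidate that already exists or has already been assigned.
     */
    static Map<Long, String> renamesFor(List<Report> reports) {
        Set<String> existing = new HashSet<>();
        for (Report r : reports) {
            existing.add(r.name());
        }
        Set<String> claimed = new HashSet<>();
        Map<Long, String> renames = new LinkedHashMap<>();
        for (Report r : reports) {
            if (claimed.add(r.name())) {
                continue; // first occurrence keeps its name
            }
            int n = 2;
            String candidate = r.name() + " (" + n + ")";
            while (existing.contains(candidate) || claimed.contains(candidate)) {
                n++;
                candidate = r.name() + " (" + n + ")";
            }
            claimed.add(candidate);
            renames.put(r.id(), candidate);
        }
        return renames;
    }

    public static void main(String[] args) {
        List<Report> sample = List.of(new Report(1, "Baseline run"), new Report(2, "Baseline run"));
        // Emit one UPDATE per rename; single quotes are doubled for SQL string literals.
        renamesFor(sample).forEach((id, name) -> System.out.println(
                "UPDATE SCENARIOSUITEREPORT SET NAME = '" + name.replace("'", "''")
                        + "' WHERE ID = " + id + ";"));
    }
}
```

Loading the rows (for example through JDBC) is left out; main only shows how the result maps onto UPDATE statements.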

DC Database migration Issue 3: Secure Team Server (HTTPS)

If you are connecting to an SSL-enabled server, you may get an SSLHandshakeException.

Solution:

To get around this exception, download the server certificate and import it into your local JRE trust store. To download the certificate, browse to the Decision Center home page, view the certificate in the browser and save it as a .cer file. Then import it with keytool, for example:

```
keytool -import -keystore "C:\Program Files\Java\jre7\lib\security\cacerts" -file C:\Users\abcd\Desktop\RTS_Certificate.cer
```

keytool prompts for the trust store password (changeit by default for cacerts) and asks you to confirm that the certificate should be trusted.
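After running keytool, it is worth confirming that the certificate really landed in the trust store of the JRE that runs the Ant script before retrying. The sketch below uses java.security.KeyStore to check for the alias. keytool uses mykey as the alias when no -alias option is given, and changeit is the default cacerts password, so adjust both if yours differ. defaultCacerts() resolves the trust store under java.home, i.e. the JRE executing this check, which is not necessarily the one Ant uses.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.GeneralSecurityException;
import java.security.KeyStore;

final class TrustStoreCheck {

    /** Trust store location for the JRE/JDK running this code. */
    static Path defaultCacerts() {
        return Path.of(System.getProperty("java.home"), "lib", "security", "cacerts");
    }

    /** Loads a keystore file and reports whether it holds a trusted certificate under the alias. */
    static boolean hasTrustedCertificate(Path keystore, char[] password, String alias)
            throws IOException, GeneralSecurityException {
        KeyStore ks = KeyStore.getInstance(KeyStore.getDefaultType());
        try (InputStream in = Files.newInputStream(keystore)) {
            ks.load(in, password);
        }
        return ks.isCertificateEntry(alias);
    }

    public static void main(String[] args) throws IOException, GeneralSecurityException {
        Path store = args.length > 0 ? Path.of(args[0]) : defaultCacerts();
        String alias = args.length > 1 ? args[1] : "mykey"; // keytool's default alias
        char[] password = (args.length > 2 ? args[2] : "changeit").toCharArray(); // default cacerts password
        boolean found = hasTrustedCertificate(store, password, alias);
        System.out.println((found ? "Found" : "Missing") + " trusted certificate '" + alias + "' in " + store);
    }
}
```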

Customized Branding

The first customization we ported to 8.5 was the branding, which is typically used in multi-tenant scenarios. A custom skin had been registered in skin-faces-config.xml under the WEB-INF folder, with the CSS and properties files copied into the skin and customized. Additional tabs had also been added as part of the customization, by placing a tabs.jsp file in the custom folder.

Issue 1: CSS & Properties Merge

The custom Message.properties file can’t be ported directly because version 8.5 adds new keys; as with the CSS changes, a file diff and manual edit is needed.

Solution:

The best approach was to compare the stock 7.1 properties and CSS files with the customized ones, extract the differences, and apply them directly to the new 8.5 skin files. For the CSS files this has to be done with a file diff.
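The properties part of that comparison can be automated. The sketch below computes which keys were added or changed between the stock and customized 7.1 Message.properties, overlays those values on the stock 8.5 file so its new keys are kept, and lists customized keys that 8.5 no longer defines. The argument order and output file are our own choices; the CSS still needs a manual diff.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.Properties;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

final class SkinPropertiesMerge {

    /** Keys added or changed in the customized 7.1 file compared with the stock 7.1 file. */
    static Map<String, String> customizations(Properties stock71, Properties custom71) {
        Map<String, String> diff = new TreeMap<>();
        for (String key : custom71.stringPropertyNames()) {
            String value = custom71.getProperty(key);
            if (!value.equals(stock71.getProperty(key))) {
                diff.put(key, value);
            }
        }
        return diff;
    }

    /** Starts from the stock 8.5 file (so its new keys are kept) and overlays the customizations. */
    static Properties applyTo(Properties stock85, Map<String, String> customizations) {
        Properties merged = new Properties();
        for (String key : stock85.stringPropertyNames()) {
            merged.setProperty(key, stock85.getProperty(key));
        }
        customizations.forEach(merged::setProperty);
        return merged;
    }

    /** Customized keys that the 8.5 file no longer defines; review these by hand. */
    static Set<String> obsoleteKeys(Properties stock85, Map<String, String> customizations) {
        Set<String> obsolete = new TreeSet<>(customizations.keySet());
        obsolete.removeAll(stock85.stringPropertyNames());
        return obsolete;
    }

    static Properties load(Path file) throws IOException {
        Properties props = new Properties();
        try (InputStream in = Files.newInputStream(file)) {
            props.load(in);
        }
        return props;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 4) {
            System.err.println("usage: SkinPropertiesMerge <stock71> <custom71> <stock85> <output>");
            return;
        }
        Map<String, String> custom = customizations(load(Path.of(args[0])), load(Path.of(args[1])));
        Properties stock85 = load(Path.of(args[2]));
        try (OutputStream out = Files.newOutputStream(Path.of(args[3]))) {
            applyTo(stock85, custom).store(out, "Stock 8.5 values with 7.1 customizations applied");
        }
        System.out.println("Customized keys not present in 8.5: " + obsoleteKeys(stock85, custom));
    }
}
```

Properties.store writes keys in no particular order and drops comments, so treat the output as a starting point for the 8.5 skin file rather than a finished copy.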

Issue 2: JSF version changes

IBM has changed the underlying JSF 1.1 implementation to Apache MyFaces 1.1.5.

Solution:

Add the following to faces-config.xml in the WEB-INF folder of the teamserver WAR. The renderer elements belong inside a render-kit element; if the file already has one, add them there:

```xml
<render-kit>
  <renderer>
    <component-family>javax.faces.Command</component-family>
    <renderer-type>javax.faces.Button</renderer-type>
    <renderer-class>org.apache.myfaces.renderkit.html.jsf.ExtendedHtmlButtonRenderer</renderer-class>
  </renderer>
  <renderer>
    <component-family>javax.faces.Command</component-family>
    <renderer-type>javax.faces.Link</renderer-type>
    <renderer-class>org.apache.myfaces.renderkit.html.jsf.ExtendedHtmlLinkRenderer</renderer-class>
  </renderer>
</render-kit>
```

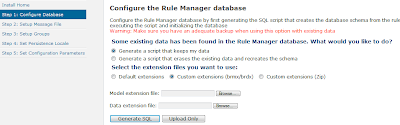

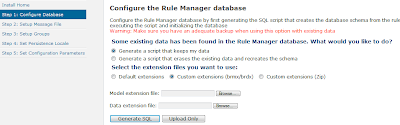
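Because the WAR is repackaged after editing faces-config.xml, a quick check that both renderer overrides made it into the final file can save a redeploy. The sketch below parses the file with the JDK's DOM parser and lists the renderer classes registered for the javax.faces.Command family; the default path in main is a placeholder, and external DTD loading is switched off so the JSF 1.1 DOCTYPE does not trigger a download.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

final class FacesConfigCheck {

    /** renderer-type -> renderer-class for every renderer registered for the component family. */
    static Map<String, String> renderersFor(InputStream facesConfig, String family)
            throws ParserConfigurationException, SAXException, IOException {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        // JSF 1.1 faces-config files declare a DTD; don't try to fetch it over the network.
        factory.setFeature("http://apache.org/xml/features/nonvalidating/load-external-dtd", false);
        Document doc = factory.newDocumentBuilder().parse(facesConfig);
        NodeList renderers = doc.getElementsByTagNameNS("*", "renderer");
        Map<String, String> result = new TreeMap<>();
        for (int i = 0; i < renderers.getLength(); i++) {
            Element renderer = (Element) renderers.item(i);
            String type = childText(renderer, "renderer-type");
            if (family.equals(childText(renderer, "component-family")) && type != null) {
                result.put(type, childText(renderer, "renderer-class"));
            }
        }
        return result;
    }

    /** Trimmed text of the first descendant element with the given local name, or null. */
    private static String childText(Element parent, String localName) {
        NodeList nodes = parent.getElementsByTagNameNS("*", localName);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        Path file = Path.of(args.length > 0 ? args[0] : "WEB-INF/faces-config.xml");
        Map<String, String> registered;
        try (InputStream in = Files.newInputStream(file)) {
            registered = renderersFor(in, "javax.faces.Command");
        }
        for (String type : List.of("javax.faces.Button", "javax.faces.Link")) {
            System.out.println(type + " -> " + registered.getOrDefault(type, "(not registered)"));
        }
    }
}
```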

Extension Models

If you have a custom extension model, make sure you upload its extension model (.brmx) and extension data (.brdx) files on the Decision Center Installation Wizard screen.

API Changes

We encountered a number of API changes. The list below is not exhaustive; it covers the ones we ran into as part of our upgrade.

Migrating Decision Center from 7.1 to 8.5 requires updating code that uses these APIs, as well as any customized skin or branding.

API and Method Changes

- API changed from ilog.rules.teamserver.web.servlets.IlrDownloadServlet to ilog.rules.teamserver.web.servlets.IlrTestingDownloadServlet.

- IlrApplicationException needs to be handled if you are calling IlrDefaultSessionController.onCommitElement.

- IlrApplicationException needs to be handled if you are calling IlrDefaultSessionController.elementCommitted.

- API changed from ilog.rules.teamserver.web.servlets.IlrDownloadUtil to ilog.rules.teamserver.web.servlets.IlrDownloalUtil.

- IlrWUtils.getTimeZone() now returns an ICU4J com.ibm.icu.util.TimeZone object.
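For code that has to be ported, the class renames above are mechanical enough to script. The sketch below rewrites Java source text for the IlrDownloadServlet rename from the list (add the others to RENAMES as needed). It also includes a small helper for code that still expects java.util.TimeZone, converting by time zone ID; this assumes the object returned by IlrWUtils.getTimeZone() is an ICU4J com.ibm.icu.util.TimeZone, whose getID() supplies the ID. Neither helper calls ODM APIs, and neither replaces compiling against the 8.5 jars.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import java.util.TimeZone;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ApiMigrationHelpers {

    /** Old fully qualified name -> new one; add further renames as you find them. */
    static final Map<String, String> RENAMES = Map.of(
            "ilog.rules.teamserver.web.servlets.IlrDownloadServlet",
            "ilog.rules.teamserver.web.servlets.IlrTestingDownloadServlet");

    /**
     * Rewrites whole-word occurrences of each old name in Java source text: first the
     * fully qualified name, then the simple name if it changed. The simple-name pass is
     * purely textual, so review the diff if another class shares that simple name.
     */
    static String rewrite(String source, Map<String, String> renames) {
        String result = source;
        for (Map.Entry<String, String> e : renames.entrySet()) {
            result = replaceWord(result, e.getKey(), e.getValue());
            String oldSimple = simpleName(e.getKey());
            String newSimple = simpleName(e.getValue());
            if (!oldSimple.equals(newSimple)) {
                result = replaceWord(result, oldSimple, newSimple);
            }
        }
        return result;
    }

    private static String replaceWord(String text, String word, String replacement) {
        Pattern pattern = Pattern.compile("\\b" + Pattern.quote(word) + "\\b");
        return pattern.matcher(text).replaceAll(Matcher.quoteReplacement(replacement));
    }

    private static String simpleName(String qualifiedName) {
        return qualifiedName.substring(qualifiedName.lastIndexOf('.') + 1);
    }

    /**
     * Converts a time zone ID (for example from the ICU object's getID()) to
     * java.util.TimeZone, rejecting IDs that java.util.TimeZone would silently map to GMT.
     */
    static TimeZone toJavaTimeZone(String id) {
        TimeZone zone = TimeZone.getTimeZone(id);
        if (!zone.getID().equals(id)) {
            throw new IllegalArgumentException("Unknown time zone ID: " + id);
        }
        return zone;
    }

    public static void main(String[] args) throws IOException {
        // Prints the rewritten source of each file given on the command line.
        for (String arg : args) {
            System.out.println(rewrite(Files.readString(Path.of(arg)), RENAMES));
        }
    }
}
```

The strict ID check also rejects custom offset IDs that java.util.TimeZone normalizes (for example GMT+5 becomes GMT+05:00), so relax it if your rules use those.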

The next articles in this series will cover Decision Server, Decision Validation Services customizations and other migration items.

To learn more about Prolifics, visit www.prolifics.com.

Ryan Trollip is Prolifics’ Decision Management Practice Director. Ryan is an experienced solutions architect and implementation lead with a strong background in business-driven, improvement-focused solutions, with an emphasis on Decision Management. Ryan has a proven track record of delivering successful projects, with over 15 years of experience covering project management, enterprise architecture, account management, and design/programming in a decision management context. Prior to joining Prolifics, Ryan was an Architect and Technical Account Manager, independently and for IBM/ILOG, focused on leading the delivery of complex decision management projects.


Amrinder Singh Brar is a Technical Lead for Decision Management at Prolifics. Amrinder has 8 years of experience in software development, including more than 4 years of extensive experience in the ILOG/BRMS world. His key expertise lies in implementing Decision Management solutions in the banking, telecom, healthcare and travel domains. Prior to joining Prolifics, Amrinder worked as a Solution Consultant on various ILOG migration and implementation projects for telecom and IT majors.
