Tuesday, September 29, 2009

IBM CloudBurst Part 5: IBM CloudBurst Offering in Comparison to other Cloud Offerings

In the previous blog entries we discussed the IBM offering for establishing private clouds, i.e., the CloudBurst device and its configurations. In this final part of the blog series, I will discuss other cloud computing options, specifically open source ones, and how they compare to the IBM offering.

Besides IBM, the other big players in the cloud computing domain are Amazon, Sun (Oracle), Google, Salesforce and others. Amazon EC2 (Elastic Compute Cloud) offers a very flexible cloud computing solution for public clouds, supporting both open source and vendor-specific technologies. Before we compare the offerings, let’s revisit the architectural service layers of cloud computing. The architectural services model of cloud computing can be viewed as a set of three layers, viz. applications, services and infrastructure.

Layer 1 – SAAS (Software as a service), e.g. the Salesforce CRM application

Layer 2 – PAAS (Platform as a service), e.g. Xen images offered by Amazon

Layer 3 – IAAS (Infrastructure as a service), e.g. the Amazon EC2 and S3 services

The IBM CloudBurst device offers all three layers of cloud computing in a single device for establishing a private cloud. For public clouds, IBM offers several SAAS services, e.g. IBM LotusLive Notes, Events and Meetings, and Lotus Connections.

So what does an open source cloud computing model look like? At the platform layer, companies like Sun Microsystems offer solutions built around the open source Apache, MySQL and PHP/Perl/Python (AMP) stack. Open source communities are actively developing solutions tailored to cloud computing. In fact, cloud computing is acting as a catalyst for agile new open source products such as lighttpd, an open source web server; Hadoop, a free Java software framework that supports data-intensive distributed applications; and MogileFS, a file system that enables horizontal scaling of storage.

However, early adopters of cloud computing have yet to adopt a cloud computing solution based on open source. As Tim O’Reilly, CEO of O’Reilly Media, and others have pointed out, open source is predicated on software licenses, which in turn are predicated on software distribution; in cloud computing, software is not distributed but delivered as a service over the Web. As a result, cloud computing infrastructures, and the modifications to open source technologies that enable them, tend not to be available outside the cloud vendors’ datacenters, potentially locking their users in to a specific infrastructure.

Although the software stacks that run on top of these cloud computing infrastructures could be predominantly open source, the APIs used to control them (such as those that enable applications to provision new server instances) are not entirely open, further limiting developer choice. And cloud computing platforms that offer developers higher-level abstractions such as identity, databases, and messaging, as well as automatic scaling capabilities (often referred to as “platform as a service”), are the most likely to lock their customers in. Without open interfaces linking the variety of clouds that will exist — public, private, and hybrid — practical use cases will be difficult or impossible to deliver with open source technologies.

IBM’s offering for setting up private clouds, though entirely vendor-based, does offer a scalable, dynamic, one-stop solution.

Samuel Sharaf is a Solution Director at Prolifics on the West Coast with real-world customer expertise in Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise in migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Monday, September 21, 2009

Integrating WSRR (WebSphere Service Registry and Repository) and RAM (Rational Asset Manager)

One of the challenges on a project we are currently working on is demonstrating the integration of WSRR (WebSphere Service Registry and Repository) and RAM (Rational Asset Manager).

First and foremost, there are a lot of questions about the roles and responsibilities of these two products:

- Do we need both products?

- If so, what do we use RAM for and what do we use WSRR for?

- What is an asset?

- Is a service an asset? Can there be assets other than services? Does that mean only service assets go into WSRR?

- What is a service? Is it just a WSDL? What about the service code? Should that also go into WSRR?

- How do these products work with one another?

- When I make a change in RAM does it reflect in WSRR and vice versa?

There are more questions than answers. I don't have answers to all of them, but I am sure we will learn more as we work on this project, and the team will post more answers here on this blog.

Today we took the first step: how do we get WSRR and RAM connected? To my surprise, it was not difficult to accomplish.

We had a RAM instance (v7.1) running on a VM image.

I installed WSRR v6.2 on the same image and, since it was for a POC, used the Derby database for WSRR. (I have also installed WSRR with a DB2 back end before, and that worked fine.)

There were just two things I needed to do to get the connectivity working.

First, RAM runs on a WAS instance and WSRR was running on a separate WAS instance, and the two communicate over HTTPS. So I had to make sure the WSRR server certificate was part of the trust store of the WAS server running RAM. Nothing RAM- or WSRR-specific here; this is pure WebSphere configuration:

- Log in to the WAS administrative console of the RAM server at https://localhost:13043/ibm/console/logon.jsp

- Go to Security > SSL certificate and key management > Trust managers > Key stores and certificates > NodeDefaultTrustStore > Signer certificates

- Click Retrieve from port and provide the following values: Host: localhost, Port: 9443, Alias: WSRR. Then retrieve the signer information, click OK and save the configuration

- Log out
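Before accepting a certificate retrieved this way, it can be worth confirming that it really belongs to the WSRR server. Below is a minimal Python sketch, assuming the same host and port used above (localhost, 9443). It fetches the certificate the server presents, without validating it, and prints its SHA-1 fingerprint so you can compare it with the fingerprint shown in the console. The function names and the wsrr-signer.pem file name are hypothetical, and the script does not modify the WAS trust store.

```python
import hashlib
import ssl

# Assumed WSRR HTTPS endpoint: the same values entered in "Retrieve from port".
WSRR_HOST = "localhost"
WSRR_PORT = 9443
PEM_FILE = "wsrr-signer.pem"  # hypothetical output file name


def fetch_server_pem(host: str, port: int) -> str:
    """Return the certificate presented by host:port as a PEM string.

    No CA file is passed, so the certificate is NOT verified; that is
    exactly why its fingerprint should be checked by hand.
    """
    return ssl.get_server_certificate((host, port))


def sha1_fingerprint(pem: str) -> str:
    """Colon-separated, upper-case SHA-1 digest of the DER-encoded certificate."""
    der = ssl.PEM_cert_to_DER_cert(pem)
    return ":".join(f"{b:02X}" for b in hashlib.sha1(der).digest())


def main() -> None:
    pem = fetch_server_pem(WSRR_HOST, WSRR_PORT)
    print("SHA-1 fingerprint:", sha1_fingerprint(pem))
    # Keep a copy of the certificate for later troubleshooting.
    with open(PEM_FILE, "w", encoding="ascii") as f:
        f.write(pem)


if __name__ == "__main__":
    try:
        main()
    except OSError as err:  # refused, timed out or TLS failure (ssl.SSLError is an OSError)
        print(f"Could not retrieve a certificate from {WSRR_HOST}:{WSRR_PORT}: {err}")
```

If the fingerprint printed here matches the one the console shows, you are trusting the server you think you are.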

Second, we needed to configure the connection to WSRR in the RAM administration console:

- Log in to the RAM UI as admin

- Go to the admin tab and click on 'Community Name'

- Go to Connections

- Add a WSRR connection

- Name it LOCAL WSRR

- Enter https://localhost:9443/ as the URL

- Enter admin / admin as the user ID and password

- Click 'Test Connection'; it should succeed

- Click Synchronize; you should see the message "WSRR-Asset Manager synchronization started"

That’s it. I was then able to search in RAM and see the assets that were in WSRR. This was a simple test with standalone instances. I expect clustered configurations may bring some challenges, but it was a pleasant surprise to see something work the first time you try it.

Rajiv Ramachandran first joined Prolifics as a Consultant and is currently the Practice Director for Enterprise Integration. He has 11 years of experience in the IT field, including 3 years at IBM as a developer in its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere solutions at Fireman’s Fund Insurance. He currently specializes in SOA and IBM’s SOA-related technologies and products. An author in the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Monday, September 14, 2009

Wading Through Requirements on SOA/BPM/BAM Projects!

On a recent project, I was heavily involved in the requirements phase of an SOA/BPM/BAM project. Requirements elicitation can be a challenge in its own right; on large projects with large teams and unclear methodologies, approaches and tools/technologies, the problem is exacerbated.

Now throw in the whole BAM concept and things really get confusing. What I found is that BAM requires a customized approach to requirements elicitation. BAM requirements are all about the performance metrics of the business, and it is very easy for Business Analysts and Business Owners to confuse high-level and low-level requirements with design. This seems to be more of an issue with BAM because of the nature of its requirements. Business Analysts and Business Owners need to focus on the scope of the requirements and the dimensions, NOT the design. When the business side elaborates on how they want to measure their business, there is a delicate balance between describing the metrics and KPIs (Key Performance Indicators) themselves and prescribing the calculations, measures and metrics used to fulfill the requirement. Many times the business side not only describes the KPIs they want to track, but also starts to tell you where to gather the data and how to calculate it. This often imposes restrictions on the design, which is the responsibility of the S/A and the Designer.

In the end, we created a specialized approach for this project. We incorporated BAM-specific specification and design documents to help everyone understand the process and to supply the necessary information to the BAM design team.

Some Definitions:

BAM (Business Activity Monitoring) - Gives real-time insight into the performance and operation of your business processes.

KPIs (Key Performance Indicators) - Significant measurements used to track performance against business objectives.

Monitor Dimensions - Data categories that are used to organize and select instances for reporting and analysis.

Requirements Dimensional Decomposition – The narrowing of requirements to the point of being clear, concise and unambiguous.

Metric - A holder for information, usually a business performance measurement. A metric can be used to define the calculation for a KPI, which measures performance against a business objective.
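To make these definitions concrete, here is a minimal Python sketch of how a metric, a monitor dimension and a KPI relate. Everything in it is hypothetical: the order-fulfillment scenario, the field names and the 48-hour target are illustrative assumptions, not taken from the project. Note that the KPI itself only states what is measured and the target (the requirement), while the grouping and averaging inside evaluate are design decisions.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Tuple


@dataclass
class ProcessInstance:
    """One monitored instance of a hypothetical order-fulfillment process."""
    region: str            # monitor dimension: organizes/selects instances for reporting
    duration_hours: float  # metric: holds a business performance measurement


@dataclass
class Kpi:
    """KPI: measures a metric against a business objective (here, a maximum average)."""
    name: str
    target_hours: float

    def evaluate(self, instances: Iterable[ProcessInstance]) -> Dict[str, Tuple[float, bool]]:
        """Average the metric per dimension value and report whether the target is met."""
        by_region: Dict[str, List[float]] = defaultdict(list)
        for inst in instances:
            by_region[inst.region].append(inst.duration_hours)
        result = {}
        for region, values in by_region.items():
            avg = mean(values)
            result[region] = (avg, avg <= self.target_hours)
        return result


if __name__ == "__main__":
    kpi = Kpi("Average order fulfillment time", target_hours=48.0)
    sample = [
        ProcessInstance("East", 30.0),
        ProcessInstance("East", 50.0),
        ProcessInstance("West", 60.0),
    ]
    for region, (avg, met) in sorted(kpi.evaluate(sample).items()):
        print(f"{region}: {avg:.1f} h (target met: {met})")
```

With the sample data, East averages 40.0 hours and meets the target, while West averages 60.0 hours and does not.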

Jonathan Machules first joined Prolifics as a Consultant and is currently a Technology Director specializing in SOA, BPM, UML and IBM's SOA-related technologies. He has 12 years of experience in the IT field: 2 years at Oracle as a Support Analyst and 10 years in consulting. Jon is a certified IBM SOA Solution Designer, Solutions Developer, Systems Administrator and Systems Expert. Recent speaking engagements include talks on SOA end-to-end integration at IMPACT 2007 and 2008, and on SOA and WebSphere Process Server at the SOA World Conference in 2007.

Tuesday, September 8, 2009

IBM CloudBurst Appliance Part 4: Medium and Large Scale Configurations with Practical Scenarios

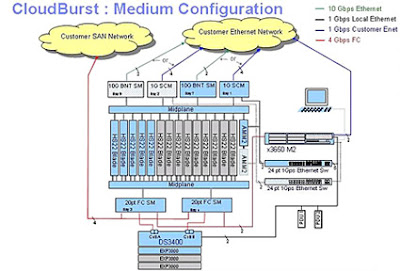

In part 4 of this blog series on the IBM CloudBurst device, we will discuss basic configurations of the CloudBurst device. Figure 1.0 shows the CloudBurst device in a medium-size configuration. Note that the key interfaces of the device are with the customer storage and Ethernet networks. As we discussed in the previous blog entries, the CloudBurst device is highly modular and can be configured according to customer requirements. The physical configuration shown here serves as an example.

The HS22 blade configuration in the middle is a good representation of the blade center system, which starts at 4 blades and can grow to 14 blades to accommodate larger loads. The HS22 blades have embedded flash memory that comes preloaded with ESXi images; apart from that, there is no other storage on the HS22 blades. The DS3400 (bottom of the figure) represents storage attached to the blade center and can be expanded if required. To connect to their network, customers can order the device with a 1 Gb switch module, or opt for 10 Gb if their network supports it. Also important to note is the 4 Gbps Fibre Channel connectivity the device offers to connect to the DS3400 storage unit and to the customer storage network. The x3650 M2 is the management server, which provides the management interface for CloudBurst device administrators.

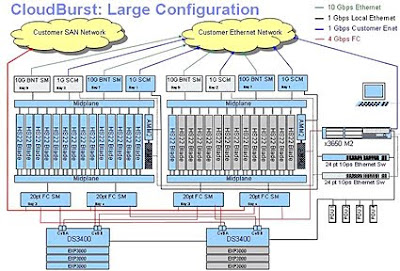

The CloudBurst device can easily be scaled to accommodate larger practical scenarios. Figure 2.0 shows the CloudBurst device in a larger configuration.

Note that it looks essentially the same as the medium configuration; the key difference is an additional blade center array for more capacity. Thanks to high-speed modular connectivity, the two blade centers are connected at both the network and storage levels. The x3650 management server provides a single management interface for both blade centers and storage units.

Let’s look in more detail at the storage part of the CloudBurst device, since it holds the customer image repository (VMware images) and the virtual machine management software. By default, the device comes with the DS3400 storage unit, which has a raw capacity of 5.4 terabytes with 4.5 terabytes of usable space. It comes fully configured with RAID 5 and one hot-swap spare drive that takes over if one of the array drives fails. As shown in Figures 1.0 and 2.0, the storage can be expanded with EXP3000 storage expansion units, each with 5.4 terabytes of capacity. So how is the storage typically used? In practical scenarios, the VM management software takes up to 200 GB. Customer-supplied VMware images can take up 1.2 TB, assuming 100 images at 12 GB each, and another 1 TB can be used to back up those images. Because of the built-in, highly modular storage expansion mechanism, it is easy to scale for a larger cloud.
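The storage figures above can be sanity-checked with a little arithmetic. The Python sketch below adds up the example usage (200 GB for VM management software, 100 images at 12 GB each, 1 TB of backups) against the usable space, in decimal units (1 TB = 1,000 GB) as the 1.2 TB figure implies. It also shows one way a 5.4 TB raw / 4.5 TB usable split could arise under RAID 5 with one hot spare; the drive layout (twelve 450 GB drives) is our assumption, not a documented CloudBurst specification, and the function names are hypothetical.

```python
GB_PER_TB = 1000  # decimal units, consistent with 100 x 12 GB = 1.2 TB


def raid5_usable_gb(drives: int, drive_gb: float, hot_spares: int = 1) -> float:
    """Usable RAID 5 capacity: hot spares hold no data and one drive's worth goes to parity."""
    active = drives - hot_spares
    if active < 3:
        raise ValueError("RAID 5 needs at least 3 active drives")
    return (active - 1) * drive_gb


def planned_usage_gb(images: int, image_gb: float,
                     mgmt_gb: float = 200, backup_gb: float = 1000) -> float:
    """Management software + customer images + image backups, as in the example above."""
    return mgmt_gb + images * image_gb + backup_gb


if __name__ == "__main__":
    # Assumption: 12 x 450 GB drives (5.4 TB raw), one of them the hot spare.
    drives, drive_gb = 12, 450
    usable = raid5_usable_gb(drives, drive_gb)
    used = planned_usage_gb(images=100, image_gb=12)
    print(f"raw:      {drives * drive_gb / GB_PER_TB} TB")
    print(f"usable:   {usable / GB_PER_TB} TB")
    print(f"planned:  {used / GB_PER_TB} TB")
    print(f"headroom: {(usable - used) / GB_PER_TB} TB")
```

With these numbers, the example workload uses 2.4 TB of the 4.5 TB usable, leaving 2.1 TB of headroom before an EXP3000 expansion unit is needed.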

In the next part of this series, we will discuss how the IBM offering compares to other competitive offerings, including open source cloud computing solutions… stay tuned.

Samuel Sharaf is a Solution Director at Prolifics on the West Coast with real-world customer expertise in Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise in migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Thursday, September 3, 2009

BPM BlueWorks - Collaborating in the Cloud

I saw a demo of IBM’s new offering called BPM BlueWorks and was really impressed. It has many capabilities, but I was most excited by the ability to collaborate within the cloud using BPM tools.

As a systems integrator, we often help our customers assess the benefits of a BPM initiative for their organization. We pride ourselves on being able to help the business users within an organization "simulate and visualize" their end results, dashboards, metrics, etc. before the IT team even begins implementation. This is a powerful and important step in the process because it sets expectations correctly. With BPM BlueWorks, I think we will be able to collaborate with business analysts and users even more effectively, for example by conducting workshops where we create strategy maps, process maps and capability maps together, all within the cloud. We can import information from, for instance, PowerPoint, modify it within the cloud and export artifacts to Modeler.

I look forward to learning more as this rolls out.

Ms. Devi Gupta directs the market positioning for Prolifics and helps manage the company’s strategic alliance with IBM. Under her guidance, Prolifics has made the critical transition from a product and services company to becoming a highly reputable WebSphere service provider and winner of several awards at IBM including the Business Partner Leadership Award, Best Portal Solution, IMPACT Best SOA Solution, Rational Outstanding Solution and Overall Technical Excellence Award.