Tuesday, December 22, 2009

Integrating MQ FTE with WebSphere Process Server/WESB

Tuesday, December 1, 2009

Good design and usability principles

Alex Ivkin, Senior IT Security Architect

I am a big proponent of usability. After all, regardless of how good something is, or how many cool features it has, if it is unusable, it is worthless. A hard-to-use application, website, or in fact anything that interacts with a human will not be popular, will lose out to the competition, or will be ignored altogether. There are many articles on the web with examples and lists of usability principles, so I will not go into that here.

It seems, however, that many sites, like ss64.com or useit.com, suffer from a common pitfall in usability design: sacrificing design by taking usability too far. They subscribe to the lowest common denominator in an effort to be usable by the biggest possible crowd. This makes them very plain and downright ugly. Sure, they cover 99% of the crowd out there, not the 95% a good design would cover, but in the push for that extra 4% they lose much in beauty and attractiveness.

Ever wondered why Apple design wins so much praise? It is not only created with usability in mind, it is also very attractive. Good, usable design, after all, has clues that go beyond simply making it readable or understandable. The clues are like little streaks of color on a bland background that make it come alive, make it stand out and win over a more “usable” background for most people out there. Combining a creative effort with a usability agenda is the winning combination.

With that in mind here are the good usability design principles:

- Start with a use-case. Run through how you think the users will approach the tasks and navigate through. You will be wrong, but that’s a start.

- Think how it could be simplified. In many cases, simpler is better. Many designs, like the single-handle faucet, start off designed for ease of use through simplicity, and then they win over. Assume you are designing for people who are resource constrained: “the less brain I can devote to this task the better.”

- Be creative. Think how you can make it more attractive.

- Consider performance. Yes it is a big usability factor.

- Implement and fix bugs (another big usability factor).

- Rinse and repeat.

What you can do to improve it if you have run out of ideas:

- Think about HTML/XHTML validation and CSS compliance

- Focus on making it understandable by all kinds of colorblind people

- Sprinkle with metadata, image tags and SEOs

An interesting twist to the discussion above was mentioned in a recent Wired article on ‘good enough tech’. The usability principles break down somewhat at the level of economics. In other words, if something is cheap enough and usable enough, it will work for most of us. So, think of where your design fits economically and how it would compete in that niche. If your stuff is cheap, it may work with a cheap design and be only somewhat OK to use (think IKEA). If your stuff is free, it may limp by being somewhat unusable. Like this blog.

Alex Ivkin is a senior IT Security Architect with a focus in Identity and Access Management at Prolifics. Mr. Ivkin has worked with executive stakeholders in large and small organizations to help drive security initiatives. He has helped companies succeed in attaining regulatory compliance, improving business operations and securing enterprise infrastructure. Mr. Ivkin has achieved the highest levels of certification with several major Identity Management vendors and holds the CISSP designation. He is also a speaker at various conferences and an active member of several user communities.

Tuesday, November 17, 2009

Human Centric Processes: A Fresh Perspective

With BPM gaining so much attention, any workflow product worth its salt provides a lot of flexibility in the definition and execution of processes, specifically human centric processes, both structured and unstructured. This flexibility, however, comes at a cost, both in terms of the complexity and clarity of process definitions and in terms of runtime performance. Also, making changes to workflows always remains a challenge. Simple changes, like making steps execute in parallel instead of sequentially, are a nightmare to implement and are most often simply not taken up.

It does not really need to be that complex if we take a somewhat hybrid approach to structured and unstructured processes. There are certain steps that have to happen in a particular order, and then there are others that can be done at any time if certain pre-requisites are met. We have to start thinking of processes as steps where each step has a set of pre-requisites. These pre-requisites could be related to the steps in the workflow or could be content or context related. Instead of workflows, human centric processes can be defined as a set of steps with pre-requisites. For example, a step X can be executed only when Step A and Step D are completed, the status of a document is accepted, and the flow was initiated by a gold client.
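The step X example can be sketched in a few lines of Python. This is only a minimal illustration of the idea, not how any workflow product implements it: the names `Step` and `ready_steps`, and the context keys `document_status` and `client_tier`, are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Step:
    """A process step described only by its pre-requisites (hypothetical model)."""
    name: str
    # Other steps that must be completed first.
    requires_steps: Set[str] = field(default_factory=set)
    # Content/context pre-requisites, each a predicate over the process context.
    requires_context: List[Callable[[Dict[str, str]], bool]] = field(default_factory=list)

    def is_ready(self, completed: Set[str], context: Dict[str, str]) -> bool:
        """A step may run once every step and context pre-requisite holds."""
        return self.requires_steps <= completed and all(
            check(context) for check in self.requires_context
        )


def ready_steps(steps: List[Step], completed: Set[str], context: Dict[str, str]) -> List[str]:
    """Return the names of steps that are not yet done and may run now."""
    return [
        s.name for s in steps
        if s.name not in completed and s.is_ready(completed, context)
    ]


# Step X needs A and D completed, an accepted document and a gold client.
steps = [
    Step("A"),
    Step("D"),
    Step(
        "X",
        requires_steps={"A", "D"},
        requires_context=[
            lambda ctx: ctx.get("document_status") == "accepted",
            lambda ctx: ctx.get("client_tier") == "gold",
        ],
    ),
]

context = {"document_status": "accepted", "client_tier": "gold"}
print(ready_steps(steps, set(), context))       # A and D have no pre-requisites
print(ready_steps(steps, {"A", "D"}, context))  # X becomes available
```

Note that nothing here says whether A and D run sequentially or in parallel. That ordering falls out of the pre-requisites, which is what makes such changes cheap.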

This approach should be extended with user experience simulation to come up with the typical paths the workflow will take and this should be visually depicted. This will provide both clarity in terms of the definition and flexibility to change the workflows in an extremely simplified fashion.

Call it pre-requisites or workflow rules, the idea is to provide extreme dynamicity to human centric processes, an area that has not been addressed by most of the so-called dynamic BPM products, which cater almost always to system centric processes.

Anant Gupta was recently named the SOA Practice Director at Prolifics after serving as a Senior Business Integration and J2EE architect. Anant has extensive experience in IBM's SOA software portfolio and specializes in delivering business integration and business process management solutions. He has worked for major clients in the banking, insurance, telecommunications and technology industries.

Monday, November 9, 2009

Service Versioning in WSRR

We have heard the word “Change” used a lot in recent months, and I have to agree that “There is nothing more permanent than change”. In the services world, “Change” brings about a unique challenge: “Versioning”. As I enhance my service to add new functionality or update existing logic, I need to create a new version of the service. The reason is that, most often, I need to support multiple versions of the same service in my environment, as I might have different clients who would like to use different versions of the service.

WebSphere Services Registry and Repository (WSRR) is the place where I store all my service definitions. WSRR allows me to define a version number for a service, i.e. I could have multiple versions of the same service in WSRR.

What is missing in WSRR, however, is the ability to connect multiple versions of a service. What WSRR does provide is the flexibility to add metadata to service definitions. I have created two such relationship attributes, called nextVersion and previousVersion, and have used them to build a custom way to link multiple versions of a service.
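To make the linking idea concrete, here is a small Python sketch that treats the two relationships as a doubly linked list and walks it. It does not use any WSRR API: the `registry` dictionary, the `OrderService_*` names and the `version_chain` function are assumptions made purely for illustration. Only the attribute names nextVersion and previousVersion come from the setup described above.

```python
from typing import Dict, List, Optional

# Hypothetical in-memory stand-in for registry entries and their
# custom relationship attributes.
registry: Dict[str, Dict[str, Optional[str]]] = {
    "OrderService_1.0": {"previousVersion": None, "nextVersion": "OrderService_1.1"},
    "OrderService_1.1": {"previousVersion": "OrderService_1.0", "nextVersion": "OrderService_2.0"},
    "OrderService_2.0": {"previousVersion": "OrderService_1.1", "nextVersion": None},
}


def version_chain(entry: str) -> List[str]:
    """Return every version linked to `entry`, ordered oldest to newest."""
    # Rewind to the oldest version, guarding against accidental cycles.
    first = entry
    visited = {first}
    while (prev := registry[first]["previousVersion"]) is not None and prev not in visited:
        first = prev
        visited.add(first)

    # Walk forward, collecting each linked version once.
    chain = [first]
    visited = {first}
    while (nxt := registry[chain[-1]]["nextVersion"]) is not None and nxt not in visited:
        chain.append(nxt)
        visited.add(nxt)
    return chain


# Starting from any version yields the full set of versions affected by a change.
print(version_chain("OrderService_1.1"))
```

Starting the walk from the middle of the chain still returns all three versions, which is the kind of answer an impact analysis over linked versions needs.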

Such a custom relationship allows me to do an impact analysis in my registry to see the following results:

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field — 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Tuesday, November 3, 2009

Why Move to Portal 6.1?

Samuel Sharaf, Solution Director West Coast

We recently proposed a WebSphere Portal 6.1 upgrade to one of our very important customers. Their environment is currently running on Portal 6.0.x. The customer’s first question was, “What value does Portal 6.1 provide over version 6.0, and how would it benefit us?” To answer their question, we developed a simple table which lists the features available in the latest version of Portal and a brief description of each.

These two columns, in table 1.0 below, can be used as a basis for developing an ROI model for a customer who wants to upgrade to Portal 6.1. For example, a customer has most likely developed custom AJAX functionality to enhance the user experience. Yet with any custom code, there is a cost associated with code maintenance and enhancements. With Portal 6.1’s Web 2.0 support, most of the Portlets have built-in AJAX support. The customer was happy to see how these new features in Portal 6.1 can help reduce the ongoing code maintenance and administrative costs and help improve the overall site and user experience.

WebSphere Portal 6.1 Features and Descriptions

UI Improvements

- Themes and Skins Wizard

- Themes and skins no longer part of wps.ear. Updates are applied without restarting Portal

- AJAX enabled Portlets

- Supports JSR 286 which provides for improved inter-Portlet communication

- Portal REST services and integration with Collaboration Services

- Portlet Resource monitoring (out of box)

- Simplified administration of sites

- Greatly improved security configuration

- Security:

- Inherited security support

- User and contributor roles

- Active content filtering

- Performance:

- Changes to WCM node structure for better authoring experience/performance

- Presentation:

- Improved UI tags for content rendering

- Authoring/templating:

- In line editing, Authoring tool enhancements, EditLive RTE 5

- API:

- JSR286 Portlets to enable Web content pages and directing links to right WCM Page

Samuel Sharaf is a Solution Director at Prolifics on the West coast with real world customer expertise with Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise with migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Tuesday, October 27, 2009

OpenID Vulnerabilities

OpenID is an identity sharing and single sign-on protocol that is becoming more and more popular on the net. OpenID allows us to use a single authenticating source (aka an identity provider) to log in to any site that accepts OpenIDs (aka a service provider) without the need to create an account on that site. Yahoo!, Google, AOL, SourceForge, Facebook and many others now support it. A great idea, but unfortunately it comes with some big holes.

What OpenID means, in essence, is that you are entrusting all your account accesses to a single source. You trust your identity provider to safeguard your personal information until you decide to use it. So, to no surprise, most of the attack vectors target this trust relationship.

Spoofing an identity provider

If you use one of the common identity providers, say myopenid.com, you need to be aware of identity phishers. An attacker could devise a site that, after asking you to log in with an OpenID, sends you to a myopenid-look-a-like.com. You trustingly enter your OpenID login information and, boom, your ID and the password that opens access to all your OpenID accounts are in the wrong hands.

The switch user attack

If you are one of the paranoid types and host your own identity provider, say via a WordPress OpenID plugin, you may succumb to a URL hijacking technique. If attackers gain the ability to modify pages on your site (PHP is great at that), they could then modify the headers on your pages to redirect OpenID validation requests to their own identity provider. With the redirect configured, when they log in to a service provider with your OpenID URL, the service provider will authenticate against the attackers’ own identity provider, thus making them appear as you anywhere they go. We’ve proven this scenario on our host, and it is very viable and very scary.
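To illustrate why a modified page is enough, here is a rough Python sketch of the HTML-based discovery step a service provider performs on your OpenID URL. It assumes discovery through `link` tags in the page head (the `openid.server` / `openid.delegate` rel values of OpenID 1.1 and `openid2.provider` / `openid2.local_id` of OpenID 2.0) and ignores XRDS-based discovery entirely. The class name, function name and both example URLs are made up for the illustration.

```python
from html.parser import HTMLParser
from typing import Dict

# rel values used for HTML-based discovery in OpenID 1.1 and 2.0.
DISCOVERY_RELS = {"openid.server", "openid.delegate", "openid2.provider", "openid2.local_id"}


class OpenIDLinkParser(HTMLParser):
    """Collects the identity provider endpoints advertised by a page."""

    def __init__(self) -> None:
        super().__init__()
        self.endpoints: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        # A rel attribute may carry several space-separated values.
        for rel in (attributes.get("rel") or "").lower().split():
            if rel in DISCOVERY_RELS and href:
                self.endpoints[rel] = href


def discover(html: str) -> Dict[str, str]:
    """Return the discovery links found in the given HTML document."""
    parser = OpenIDLinkParser()
    parser.feed(html)
    return parser.endpoints


# Hypothetical page served at the victim's OpenID URL.
original = '<html><head><link rel="openid.server" href="https://idp.example.org/server"></head></html>'
# The same page after an attacker has edited a single attribute.
tampered = original.replace("https://idp.example.org/server", "https://attacker.example.net/server")

print(discover(original))
print(discover(tampered))
```

The service provider has no way to tell the two pages apart: whatever endpoint the page advertises is the one it will ask to vouch for the URL.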

OpenID URL hijacking

Another set of attacks targets the OpenID URL. An OpenID URL is your unique identifier on the net to the service providers. If someone gains control over the URL, either through DNS manipulation (google DNS attacks) or site hacking, they have a key to all your accounts. An example would be to trick a service provider into resolving your OpenID URL to an attacker’s site that uses the attacker’s identity provider, thus making the service provider trust an attacker posing under the URL of the victim. The use of i-Numbers in lieu of URLs is supposed to help with this issue, but they are not yet widely supported.

Cross site request forgeries

OpenID does not validate all of the traffic that passes between the identity provider and the service provider through the user’s browser, for example via hidden iframes. A malicious site could supply your browser with a page that, knowing your OpenID from the cookies, could determine your identity provider name and automate actions against any number of service providers, acting on your behalf. The actions could range from creating accounts under your name to divulging details of your existing accounts on these sites. Secunia provided detailed research on this type of attack.

Automation attacks

The OpenID sign-on process makes it really easy for automated processes to log in or create accounts on the fly. A spammer could create an identity provider that validates its own IDs at a rate of hundreds a second and then supply them to the service providers. This could be mitigated by pairing an OpenID field with a captcha field, but that is not supported by most OpenID service providers right now.

Security holes

Yes, there are bugs, both in the specifications and in the technical implementations. I will not go into details here, since these are typically short-lived and are addressed by the vendors on an ongoing basis. The holes are exploited by hackers and are to be expected for any new technology appearing on the web. The problem is that the stakes with OpenID are a lot higher. Losing an OpenID means not only losing your ID but also losing a multitude of accounts and the associated personal information.

OpenID keeps your ID off your hands and on the net, a place that you have no control over. I am sure current OpenID providers will work hard to make sure they are well protected to retain your trust, but rest assured, there will be breaches. Identity providers are very attractive targets for hackers, since they act as gateways to a wide array of accounts. And when a breach happens, all your accounts are potentially lost, not just one. Thus, OpenID should be treated as a convenience, not as a way to increase the security of your accounts. From another perspective, assuming Linus’ law holds, I do not see OpenID going the Microsoft Passport way. OpenID has its advantage in being open and freely available.

Nonetheless, until OpenID is mature from the security perspective, like SSL and GPG, I am sticking with managing my accounts in a web browser’s encrypted password store. It’s almost as convenient and a lot better protected. After all, you keep your driver’s license in your own wallet, not posted on the web.

Alex Ivkin is a senior IT Security Architect with a focus in Identity and Access Management at Prolifics. Mr. Ivkin has worked with executive stakeholders in large and small organizations to help drive security initiatives. He has helped companies succeed in attaining regulatory compliance, improving business operations and securing enterprise infrastructure. Mr. Ivkin has achieved the highest levels of certification with several major Identity Management vendors and holds the CISSP designation. He is also a speaker at various conferences and an active member of several user communities.

Monday, October 19, 2009

Planning and Scheduling SOA/BPM Development - DO NOTS!

As the momentum and understanding of BPM and SOA have increased, the projects have followed. IBM WebSphere Process Server and ESB (WPS/WESB) are common products that organizations start with when moving towards BPM/SOA/Web services. Many organizations are new to this type of SDLC. This discussion is in the context of my experience on WPS/WESB projects and can certainly be applied to other workflow and ESB products/technologies.

DO NOT:

- Delay Data Model and Data Design efforts

- Plan integration validation between systems/apps/services scheduled toward the end of the project

- Assume a Sr. Developer with no experience on WPS/WESB will design/develop a functioning application

DO NOT plan integration validation between systems/apps/services toward the end of the development SDLC. On one recent project the customer was not familiar with WPS/WESB or integration projects in general. They planned all their integration testing toward the end of the SDLC in the Testing Phase. I am not saying integration testing shouldn’t be done in the testing phase, but it should NOT be planned only at the end of the SDLC. A common project plan will include a ‘Vertical Slice’, ‘Prototype’, ‘Wire-frame’ or whatever term you are familiar with, that has the goal of validating the integration of the various systems early on in the SDLC.

DO NOT assume a Sr. Developer with no experience on WPS/WESB will design and develop a functioning platform. WPS/WESB are enterprise platforms that have multiple layers of technologies (e.g. Java, JEE, BPEL, WS, XML, XSLT, etc.). As a proud, successful Sr. Developer you may very well be able to create an application on these platforms that functions in a non-production environment. However, there are a number of nuances that impact performance and that should be addressed by design patterns, depending on the requirements. Large business objects are one concern that comes to mind. Acceptable object size depends on business transaction volume, CPU architecture, RAM, heap size and other factors. Design patterns for dealing with large business objects can be applied, giving you better performance.

WPS/WESB is a product I’ve worked with extensively. It has seen major enhancements and improvements on usability/consumability but this doesn’t mean anyone can create a well functioning app.

Jonathan Machules first joined Prolifics as a Consultant, and is currently a Technology Director specializing in SOA, BPM, UML and IBM's SOA-related technologies. He has 12 years experience in the IT field — 2 of those years at Oracle as a Support Analyst and 10 years in Consulting. Jon is a certified IBM SOA Solution Designer, Solutions Developer, Systems Administrator and Systems Expert. Recent speaking engagements include IMPACT on SOA End-to-End Integration in 2007 and 2008, and SOA World Conference on SOA and WebSphere Process Server in 2007.

Wednesday, October 14, 2009

Building an Enterprise Application Integration Solution

Rajiv Ramachandran, Practice Director, Enterprise Integration / Solution Architect

Having an integration infrastructure that connects all enterprise systems to one another and provides seamless and secure access to customers, partners, and employees is the foundation of a successful enterprise. I have been involved with a lot of customers discussing their EAI architectures. More often than not, I have noticed that the approaches considered to implement such an architecture are not complete and do not provide the benefits that may be achieved with a well connected enterprise. In this blog entry, I would like to highlight aspects that need to be considered to build an end-to-end integration solution. (Note: This blog entry will not get into the details on how to implement each of these areas, which would result in me writing a book :-).)

- Connectivity – Avoiding point-to-point connectivity and ensuring that you have loosely coupled systems is key to ensuring that your EAI architecture is flexible and can scale. Use an ESB as the heart of your EAI architecture and ensure that your ESB has support for all major protocols (HTTP, SOAP, JMS, JCA, JDBC, FTP, etc.) and comes with adapters for common enterprise applications like SAP, Oracle, Siebel, PeopleSoft, etc.

- Patterns - Build a pattern based integration solution. The following is an excellent paper that outlines some of the common patterns used in the integration space: http://www.ibm.com/developerworks/library/ws-enterpriseconnectivitypatterns/index.html

- Data - Data is of great significance when it comes to integration. Different systems have different data formats and there are common items to consider that can help you deal with these differences:

- Define canonical data formats and ensure that you have mapping from application specific formats to canonical formats. Understand the various data formats that exist in your enterprise today and evaluate what it will take to map and manage complex data formats.

- EDI data is common in many enterprises and will require special handling.

- Define a strategy for handling reference data, how lookups can be done against this data and how reference data can be maintained.

- Define rules around both syntactic and semantic validation of messages. Do not over do validation as you will pay a price when it comes to performance. Be judicious in what you want to validate and where.

- Monitoring - An aspect that is often overlooked when building integration solutions is monitoring. Ensure that you have the right framework(s) in place to monitor your integrations and service components. You will need the ability to monitor every protocol the ESB supports. You also need to couple monitoring with notifications and error handling. You will need a strategy for auditing your messages. Again, as in the case with validations, be judicious in what you want to audit and when. There is a price to pay. Define a centralized service for auditing requirements and ensure that all integration components make use of this service.

- Security - Security is the most critical part of implementing an integration solution. I have to say that most customers realize that security is important but sometimes, because of a lack of expertise in how to correctly secure their solution, the end result is a solution that is way more costly and in fact less secure than desired. Some considerations are:

- Define your security requirements – authentication, authorization, encryption, non-repudiation, etc.

- Decide what aspect(s) can be supported by transport level security and when you need message level security.

- Decide where hardware components can be used to better implement security than software components.

- Use open standard protocols so that you can easily integrate with different systems – both internal and external.

- Service Oriented Integration – With the adoption of SOA, one of the key architectural models used for integration is to connect to service interfaces that are exposed by various systems. With this architecture, it is also now possible to choose what service / functionality you use dynamically at runtime. Another aspect that SOA has brought to the integration world is a policy-driven approach to integration – that is, data that is being passed to a system or between systems is used to check what policy needs to be applied at runtime to determine what service to use and what actions to perform (auditing, validation, encryption etc.). Integration coupled with SOA introduces another component into the EAI architecture – a registry and repository where services and policies are cataloged and can be used to bring about dynamic, policy-driven behavior.
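As a small illustration of the canonical data format idea from the Data item above, the following Python sketch maps two application specific records onto one canonical shape and applies only light syntactic validation. Everything in it is hypothetical: the `crm` and `billing` source formats, their field names, and the canonical fields were invented for the example, and in a real solution the mapping would normally live in the ESB's mediation layer rather than in hand-written code.

```python
from typing import Callable, Dict

# Hypothetical canonical customer record.
CANONICAL_FIELDS = ("customer_id", "full_name", "country")


def from_crm(record: dict) -> dict:
    """Map a made-up CRM record to the canonical format."""
    return {
        "customer_id": str(record["CustNo"]),
        "full_name": f'{record["FirstName"]} {record["LastName"]}',
        "country": record["Country"].upper(),
    }


def from_billing(record: dict) -> dict:
    """Map a made-up billing record to the canonical format."""
    return {
        "customer_id": record["id"],
        "full_name": record["name"],
        "country": record["country_code"].upper(),
    }


# One mapper per application specific format; adding a system adds one entry.
MAPPERS: Dict[str, Callable[[dict], dict]] = {"crm": from_crm, "billing": from_billing}


def to_canonical(source: str, record: dict) -> dict:
    """Convert a source record and check that the required fields are present."""
    canonical = MAPPERS[source](record)
    # Deliberately light validation: presence only, no semantic checks.
    missing = [name for name in CANONICAL_FIELDS if not canonical.get(name)]
    if missing:
        raise ValueError(f"missing canonical fields: {missing}")
    return canonical


print(to_canonical("crm", {"CustNo": 42, "FirstName": "Ada", "LastName": "Lovelace", "Country": "gb"}))
print(to_canonical("billing", {"id": "42", "name": "Ada Lovelace", "country_code": "GB"}))
```

Both calls produce the same canonical record, so downstream components only ever need to understand one format, and the cost of validation stays limited to what was chosen on purpose.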

This confluence of pattern based connectivity, data handling, monitoring, security, and service-oriented integration can provide you with a well-connected enterprise that can respond quickly to changing business needs.

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field, 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Tuesday, October 6, 2009

Why SOA Governance?

One of the buzzwords that followed the introduction of SOA was “Governance”. It was interesting to see how every aspect of a new project initiative now began to be tagged with this word. All of a sudden there were project governance, architectural governance, infrastructure governance and so on. The real essence of what “SOA Governance” was, or why “Governance” was important in the context of an SOA, was lost.

I am not denying that governance is essential in every aspect of business and IT. But what I want to focus on in this blog is SOA Governance.

Services have been there all along in the technology space, but the advancement of SOA and its adoption started when both customers and vendors came together to define a standard way to describe a service. It then became possible to implement this description in a programming language of choice, deploy the service across diverse platforms, and still communicate across platform and language boundaries. With this SOA revolution, reusing services became much easier, and with reuse came a unique set of challenges.

My business depends on a service that I:

- Did not write,

- Do not own,

- Cannot control who will make changes to it and when,

- Don’t know whether it will provide me with the qualities of service that I desire

What an SOA Governance model does is bring uniformity and maturity to defining Service Ownership, Service Lifecycle, Service Identification & Definition, Service Funding, Service Publication & Sharing, Service Level Agreements, etc., and thus provide a solution to what would otherwise have become a Service Oriented Chaos.

So the next time you talk about SOA Governance, think about some of the areas defined above that pertain to an SOA and how you can align Process, People and Products to achieve an SOA Governance solution that ensures that your SOA provides real business value.

In the next set of blog entries I will focus on how IBM WebSphere Registry & Repository product helps with SOA Governance.

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field — 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Tuesday, September 29, 2009

IBM CloudBurst Part 5: IBM CloudBurst Offering in Comparison to other Cloud Offerings

In the previous blog entries we discussed the IBM offering for establishing private clouds i.e. the CloudBurst device and its configurations. In this final part of the blog series, I will discuss the other cloud computing options, specifically open source and how they compare to the IBM offering.

Besides IBM, the other big players in the cloud computing domain are Amazon, Sun (Oracle), Google, SalesForce, etc. Amazon EC2 (Elastic Compute Cloud) offers a very flexible cloud computing solution for public clouds, offering both open source and vendor specific technologies. Before we start comparing the offerings, let’s revisit the architectural service layers of cloud computing. The architectural services model of cloud computing can be viewed as a set of 3 layers, viz. applications, services and infrastructure.

Layer 1 – SAAS (Software as a service) e.g. Sales Force CRM application

Layer 2 – PAAS (Platform as a service) e.g. XEN image offered by Amazon

Layer 3 – IAAS (Infrastructure as a service) e.g. Amazon EC2 and S3 services

The IBM CloudBurst device basically offers all 3 layers of cloud computing in a single device for establishing a private cloud. As far as public cloud is concerned, IBM offers several SAAS services e.g. IBM Lotus Live Notes, Events, Meetings, and Lotus Connections etc.

So what does an open source cloud computing model look like? At the platform layer level, companies like Sun Microsystems are offering solutions built around the open source Apache, MySQL, PHP/Perl/Python (AMP) stack. Open source communities are very actively developing solutions catering to cloud computing. In fact, cloud computing is acting as a catalyst for the development of agile new open source products like lighttpd, an open source web server; Hadoop, the free Java software framework that supports data-intensive distributed applications; and MogileFS, a file system that enables horizontal scaling of storage. However, a cloud computing solution based on open source is yet to be adopted by the early adopters of cloud computing. As Tim O’Reilly, CEO of O’Reilly Media, and others have pointed out, open source is predicated on software licenses, which in turn are predicated on software distribution, and in cloud computing software is not distributed; it’s delivered as a service over the Web. So cloud computing infrastructures and the modifications to the open source technologies that enable them tend not to be available outside the cloud vendors’ datacenters, potentially locking their users in to a specific infrastructure.

Although the software stacks that run on top of these cloud computing infrastructures could be predominantly open source, the APIs used to control them (such as those that enable applications to provision new server instances) are not entirely open, further limiting developer choice. And cloud computing platforms that offer developers higher-level abstractions such as identity, databases, and messaging, as well as automatic scaling capabilities (often referred to as “platform as a service”), are the most likely to lock their customers in. Without open interfaces linking the variety of clouds that will exist — public, private, and hybrid — practical use cases will be difficult or impossible to deliver with open source technologies.

IBM’s offering for setting up private clouds, though completely vendor based, does offer a one stop solution which is scalable and dynamic.

Samuel Sharaf is a Solution Director at Prolifics on the West coast with real world customer expertise with Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise with migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Monday, September 21, 2009

Integrating WSRR (WebSphere Registry and Repository) and RAM (Rational Asset Manager)

One of the challenges we are facing as part of a current project that we are working on is to demonstrate the integration of WSRR (WebSphere Registry and Repository) and RAM (Rational Asset Manager).

First and foremost there are a lot of questions on the roles and responsibilities of both these products.

- Do we need both products?

- If so, what do we use RAM for and what do we use WSRR for?

- What is an asset?

- Is a service an asset? Can there be other assets other than services? Does that mean only service assets go into WSRR?

- What is a service? Is it just a WSDL? What about the service code? Should that also go into WSRR?

- How do these products work with one another?

- When I make a change in RAM does it reflect in WSRR and vice versa?

There are more questions than answers. I don't have answers to all these questions, and as we work on this project I am sure we will learn more. The team will post more answers here on this blog.

Today we took the first step: how can we get WSRR and RAM connected? To my surprise, I found that it was not difficult to accomplish this.

We had a RAM instance (v7.1) running on a VM Image.

I installed WSRR v6.2 on the same image and, since it was for a POC, I used the Derby DB for WSRR. (I had previously installed WSRR with a DB2 backend and it had worked fine.)

There were just 2 things I needed to do to get the connectivity working:

- RAM runs on a WAS instance. WSRR was running on a separate WAS instance. The communication between the two was over HTTPS. So I had to ensure that WSRR server certs were part of the trust store of the WAS server running RAM. Nothing RAM / WSRR specific here, pure WebSphere stuff.

- Log into the RAM WAS console using the following URL (https://localhost:13043/ibm/console/logon.jsp)

- Go to Security > SSL certificate and key management > Trust managers > Key stores and certificates > NodeDefaultTrustStore > Signer certificates

- Choose Retrieve from Port and provide the following values: Host: localhost, Port: 9443, Alias: WSRR

- Log out
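Before trusting a certificate retrieved from a port, it can be worth checking its fingerprint independently. The following is a minimal sketch in Python, not part of WAS, RAM or WSRR; the function names are hypothetical, and the host and port in the usage note are simply the values used in this post.

```python
import hashlib
import ssl


def fetch_signer_pem(host: str, port: int) -> str:
    """Fetch the certificate a TLS server presents, as PEM text.

    The certificate is retrieved without being validated, which is
    the point here: we want to inspect it before deciding to trust it.
    """
    return ssl.get_server_certificate((host, port))


def sha256_fingerprint(pem: str) -> str:
    """Return the colon-separated SHA-256 fingerprint of a PEM certificate."""
    der = ssl.PEM_cert_to_DER_cert(pem)  # strip the PEM armor, decode base64
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

Calling `sha256_fingerprint(fetch_signer_pem("localhost", 9443))` against the WSRR server should give a value you can compare with the fingerprint the console displays for the retrieved signer, assuming the console is showing a SHA-256 digest.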

We needed to set the configuration in the RAM Admin Console to connect to WSRR.

- Log into the RAM UI as admin

- Go to the admin tab, click on 'Community Name'

- Go to Connections

- Add a WSRR Connection

- Call it LOCAL WSRR

- The URL to provide is https://localhost:9443/

- The user ID and password are admin / admin

- Do 'Test Connection' and that should be successful

- Click on Synchronize and you should see the message -> WSRR-Asset Manager synchronization started

That’s it. Now I was able to search in RAM and have visibility into assets that were in WSRR. This was a simple test with stand-alone instances. I assume that when we move to clustered configurations we may have some challenges, but it was a pleasant surprise to see something work the first time I tried it.

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field — 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Monday, September 14, 2009

Wading Through Requirements on SOA/BPM/BAM Projects!

On a recent project I was heavily involved in the requirements phase of a SOA/BPM/BAM project. Requirements elicitation in its own right can be a challenge. On large projects with large teams and unclear methodologies, approaches and tools/technologies, the issue is exacerbated.

Now throw in this whole BAM concept and things really get confusing. What I found is that BAM requires a customized approach for requirements elicitation. BAM requirements are all about the performance metrics of the business. But it is very easy for the Business Analysts and Business Owners to confuse the high level and low level requirements with design. It seems this is more of an issue with BAM due to the nature of the requirements. However, the Business Analysts and Business Owners need to focus on the scope of the requirements and the dimensions, NOT the design. When the business side elaborates on how they want to measure their business, there is a delicate balance between the calculations used to create the metrics and KPIs (Key Performance Indicators) themselves and the calculations, measures and metrics used to fulfill the requirement. Many times the business side not only gives you a description of the KPIs that they want to track, they also start to tell you where to gather data and how to calculate. This often imposes restrictions on the design that is the responsibility of the S/A and Designer.

In the end, we found that we had created a specialized approach for this project. We incorporated some specific BAM Specification and Design documents to help everyone understand the process and supply the necessary information for the BAM design team.

Some Definitions:

BAM (Business Activity Monitoring) - Gives insight into the performance and operation of your business processes in real time.

KPIs (Key Performance Indicators) - Significant measurements used to track performance against business objectives.

Monitor Dimensions - Data categories that are used to organize and select instances for reporting and analysis.

Requirements Dimensional Decomposition – The narrowing of requirements to the point of being clear, concise and unambiguous.

Metric - A holder for information, usually a business performance measurement. A metric can be used to define the calculation for a KPI, which measures performance against a business objective.
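To make the relationship between these terms concrete, here is a small sketch in Python. It is purely illustrative: the field names, the sample data and the 30-hour target are all assumptions, and it is not how WebSphere Business Monitor models these concepts. It shows a metric captured per process instance, a dimension used to group instances, and a KPI that compares the aggregated value against a business target.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessInstance:
    """One completed process instance; all field names are hypothetical."""
    region: str               # a dimension value
    product: str              # another dimension value
    cycle_time_hours: float   # a metric captured per instance


def average_cycle_time(instances, dimension):
    """Aggregate the cycle-time metric per value of the chosen dimension."""
    totals = defaultdict(lambda: [0.0, 0])  # key -> [sum, count]
    for inst in instances:
        key = getattr(inst, dimension)
        totals[key][0] += inst.cycle_time_hours
        totals[key][1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


def kpi_status(value, target):
    """A KPI compares a metric-derived value against a business target."""
    return "on target" if value <= target else "off target"


instances = [
    ProcessInstance("East", "Auto", 20.0),
    ProcessInstance("East", "Home", 30.0),
    ProcessInstance("West", "Auto", 50.0),
]
by_region = average_cycle_time(instances, "region")
for region, avg in sorted(by_region.items()):
    print(region, avg, kpi_status(avg, target=30.0))
```

The business side would own the last three lines of intent (which KPI, which dimension, which target), while where the data comes from and how the average is computed stays a design decision.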

Jonathan Machules first joined Prolifics as a Consultant, and is currently a Technology Director specializing in SOA, BPM, UML and IBM's SOA-related technologies. He has 12 years experience in the IT field — 2 of those years at Oracle as a Support Analyst and 10 years in Consulting. Jon is a certified IBM SOA Solution Designer, Solutions Developer, Systems Administrator and Systems Expert. Recent speaking engagements include IMPACT on SOA End-to-End Integration in 2007 and 2008, and SOA World Conference on SOA and WebSphere Process Server in 2007.

Tuesday, September 8, 2009

IBM CloudBurst Appliance Part 4: Medium and Large Scale Configurations with Practical Scenarios

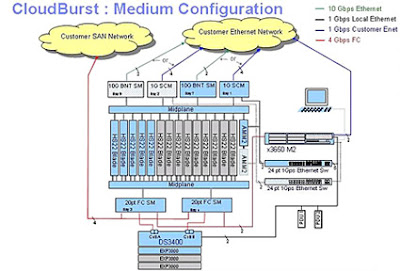

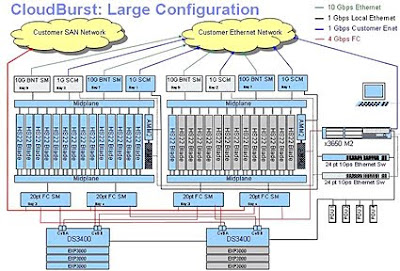

In part 4 of the blog series on the IBM CloudBurst device we will discuss basic configurations of the CloudBurst device. Figure 1.0 below shows the CloudBurst device in a medium size configuration. Note that the key interfaces of the device are with the customer storage and Ethernet networks. As we discussed in the previous blog entries, the CloudBurst device is highly modular and can be configured according to customer requirements. The physical configuration shown here serves as an example.

The HS22 blade configuration in the middle is a good representation of the blade center system, which starts at 4 blades and can grow to 14 blades to accommodate larger loads. The HS22 blades have embedded flash memory which comes preloaded with ESXi images. Besides that, there is no other storage on the HS22 blades. The DS3400 (bottom of the figure) represents storage attached to the blade center and can be grown if required. To connect to their network, customers can order the device with a 1Gb switch module or can opt for 10Gb if it's supported on their network. Also important to note is the Fibre Channel connectivity (4 Gbps) offered by the device to connect with the storage unit (DS3400) and with the customer storage network. The x3650 M2 is the management server, which provides a management interface to the CloudBurst device administrators.

The CloudBurst device can be easily scaled to accommodate larger practical scenarios. Figure 2.0 below provides a representation of the CloudBurst device in a larger configuration.

Note that it looks essentially the same as the medium configuration. The key difference is another blade center array added for more capacity. Note that because of the availability of high speed modular connectivity the two blade centers are connected both at the network level and storage level. The management server x3650 provides a singular management interface for both blade centers/storage units.

Let’s go into more detail on the storage part of the CloudBurst device, since this serves as the customer image repository (VMware images) and holds the management software of the virtual machines. By default the device comes with the DS3400 storage unit, which has a capacity of 5.4 terabytes, of which 4.5 terabytes are usable. This comes fully configured with RAID 5 and 1 hot swap spare drive (in case of failure of the main storage drive). As shown in both figure 1.0 and 2.0, the storage can be expanded with EXP3000 storage expansion units, each having 5.4 terabytes of capacity. So how is the storage typically used? In practical scenarios, the VM management software takes up to 200 GB of space. Another 1.2 TB can be taken up by customer supplied VMware images, assuming 100 images at 12 GB each. Another 1 TB of space can be used to back up the customer supplied VM images. Because of the built-in, highly modular storage expansion mechanism, it is easy to scale for a larger cloud.
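The storage arithmetic above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch using the round figures from this post and decimal units (1 TB = 1000 GB); the function name is hypothetical.

```python
GB_PER_TB = 1000  # decimal units, matching the round figures in the post


def storage_budget(usable_tb, management_gb, image_count, image_size_gb, backup_tb):
    """Return (used_tb, free_tb) for a simple CloudBurst storage plan."""
    management_tb = management_gb / GB_PER_TB
    images_tb = image_count * image_size_gb / GB_PER_TB
    used_tb = management_tb + images_tb + backup_tb
    return used_tb, usable_tb - used_tb


used_tb, free_tb = storage_budget(
    usable_tb=4.5,       # usable space on the base DS3400
    management_gb=200,   # VM management software
    image_count=100,     # customer supplied VMware images
    image_size_gb=12,
    backup_tb=1.0,       # backup of the customer images
)
print(f"used {used_tb:.1f} TB, free {free_tb:.1f} TB")
```

With these figures the plan uses about 2.4 TB and leaves roughly 2.1 TB of headroom on the base unit before an EXP3000 expansion is needed.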

In the next part of this series, we will discuss how the IBM offering compares to other competitive offerings, including open source cloud computing solutions…stay tuned.

Samuel Sharaf is a Solution Director at Prolifics on the West coast with real world customer expertise with Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise with migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Thursday, September 3, 2009

BPM Blueworks - Collaborating in the Cloud

I saw a demo of IBM’s new offering called BPM BlueWorks and was really impressed. It has many capabilities, but I was most excited by the ability to collaborate within the cloud using BPM tools.

As a systems integrator we often help our customers to assess the benefits of a BPM initiative for their organization. We pride ourselves on being able to help the business users within an organization to "simulate and visualize" their end results, dashboards, metrics, etc. before the IT team even begins implementation. This is a powerful and important step in the process that correctly sets expectations. With BPM BlueWorks I think we will be able to collaborate with the business analysts and users even more effectively, for example by conducting workshops where we can create strategy maps, process maps and capability maps together, all within the cloud. We can import information from, for instance, PowerPoint, modify it within the cloud and export artifacts to Modeler.

I look forward to learning more as this rolls out.

Ms. Devi Gupta directs the market positioning for Prolifics and helps manage the company’s strategic alliance with IBM. Under her guidance, Prolifics has made the critical transition from a product and services company to becoming a highly reputable WebSphere service provider and winner of several awards at IBM including the Business Partner Leadership Award, Best Portal Solution, IMPACT Best SOA Solution, Rational Outstanding Solution and Overall Technical Excellence Award.

Monday, August 24, 2009

IBM CloudBurst Appliance Part 3 – Logical Architecture and Physical Topology Scenario

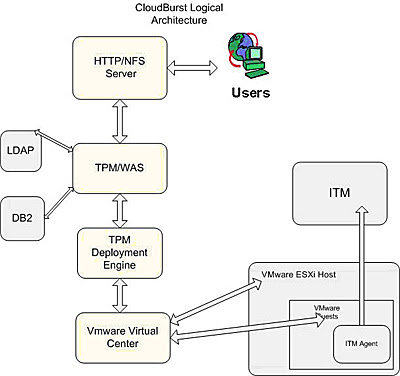

Figure 1.0 – Logical Architecture

A quick glance at the logical architecture reveals that the key components come from the Tivoli, WebSphere and VMware stacks. The first block in the overall stack is the IBM HTTP Server, which handles the user requests. The HTTP Server forwards the user requests to the Tivoli Provisioning Manager (TPM) and to the cloud computing software running on WebSphere Application Server (WAS), which together form the heart of the overall architecture. The Tivoli Provisioning Manager makes use of pre-built automation workflows and a set of scripts, which drive the self-service model that serves the VMs requested by the end user. The cloud computing software also handles any errors and exceptions. The data of the TPM and the WAS cloud computing software (blue code) is stored in DB2, while user info is stored in LDAP. The TPM deployment engine acts as a broker and fulfills the end user requests by running a set of automation packages and scripts.

The TPM deployment engine makes automation and web service calls to the VMware virtual center. The VM VC manages all the hypervisors and virtual machines which are running on base hardware. The VM VC interacts with physical machines. The physical machine is running a hypervisor – a light-weight process that runs the actual VMs which the end users will be accessing. The VC also provisions and performs customization on VMs.

ITM provides monitoring of the VMs and a dashboard for Cloud Admins to monitor the VMs and the VMware stack.

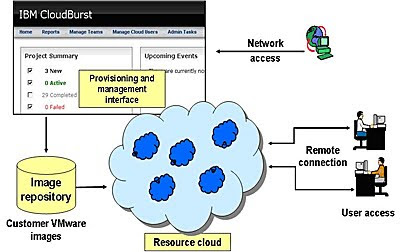

Let’s now consider a physical topology demonstrating a practical usage of the CloudBurst device. Figure 2.0 below illustrates how different users interact with the ‘Cloud’ to request resources. The ‘Resource Cloud’ is an abstraction representing the CloudBurst appliance, which can be used to create a private cloud. The resources in this case are VMware images which are provided by the customer and cataloged and stored in the image repository. A typical example would be developers working on WebSphere Portal development who require VMware images that provide a Rational development environment along with a Portal Server test instance.

Figure 2.0 – Logical View/Scenario

The figure also illustrates the CloudBurst browser-based interface, which provides provisioning and management of the Cloud Resources. There are two basic categories of users: end users (software developers and quality assurance engineers) and administrators. The Resource Cloud provides a self-servicing platform for end users to request and use resources. The administrators use the browser-based interface to manage resources and users.

In the next part of this series, we will explore medium and large scale deployment topologies of the CloudBurst appliance, scalability, network interfaces and add-on storage devices.

Monday, August 10, 2009

Service Gateway Pattern in WebSphere Enterprise Service Bus (WESB) 6.2

One of the new patterns introduced in v6.2 of WESB is the Service Gateway Pattern. There are two implementations of this pattern: a Static Gateway and a Dynamic Gateway. In this blog entry I will focus on my experiences using the Dynamic Service Gateway pattern.

One of the basic requirements that I expect of an ESB is the ability to introduce it between a client and a service (without impacting either the client or the service) and to provide value added services like logging, monitoring, auditing etc. This is exactly what we are able to accomplish with the Dynamic Service Gateway pattern. This gateway pattern is implemented as a mediation component. The interface for this mediation component has 2 operations, a request operation and a request-response operation. Both these operations accept xsd:anyType (they are not bound to the type definitions exposed by any particular service). The reference partner for this mediation also maps to the same interface. The reason this pattern is dynamic is that there is no coupling to services at compile time. You can dynamically set the service endpoint at runtime and invoke that service.
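The shape of the pattern can be sketched outside of WESB. The Python below is a conceptual illustration only, not the WESB mediation API: the class, its method names and the example URL are hypothetical, and a dictionary of callables stands in for the real transport. What it shows is the two untyped operations, the endpoint chosen per call at runtime, and the auditing added in the middle without the client or service knowing.

```python
import logging
from typing import Any, Callable, Dict, List, Tuple

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("gateway")

Service = Callable[[Any], Any]


class DynamicGateway:
    """Forwards untyped payloads to an endpoint chosen per call."""

    def __init__(self, transports: Dict[str, Service]) -> None:
        # Hypothetical stand-in for the transport layer: endpoint URL -> callable.
        self._transports = transports
        self.audit_trail: List[Tuple[str, str]] = []

    def _record(self, event: str, endpoint: str) -> None:
        # The value-added service: logging and auditing every invocation.
        log.info("%s %s", event, endpoint)
        self.audit_trail.append((event, endpoint))

    def request(self, endpoint: str, payload: Any) -> None:
        """One-way operation: deliver the payload, expect no reply."""
        self._record("request", endpoint)
        self._transports[endpoint](payload)

    def request_response(self, endpoint: str, payload: Any) -> Any:
        """Two-way operation: deliver the payload and return the reply unchanged."""
        self._record("request", endpoint)
        reply = self._transports[endpoint](payload)
        self._record("response", endpoint)
        return reply


# Usage: the caller picks the endpoint at runtime; the gateway knows no service types.
BILLING = "http://billing.example/svc"  # hypothetical endpoint
gateway = DynamicGateway({BILLING: lambda body: {"echo": body}})
reply = gateway.request_response(BILLING, {"invoice": 42})
```

Because the payload type is `Any` (the analogue of xsd:anyType here), adding a new service means adding an endpoint entry, not regenerating or recompiling the gateway.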

However, what needs to be understood is that this pattern implementation works only for SOAP 1.2 based JAX-WS Web Services. This restriction exists because in JAX-RPC the invocation was RPC based: a particular web service operation had to be specifically invoked. In JAX-WS the invocation is more messaging based, and no specific operation invocation is required. The following article series provides an in-depth analysis of the differences between JAX-RPC and JAX-WS.

http://www.ibm.com/developerworks/library/ws-tip-jaxwsrpc4/index.html

The following diagrams show a sample implementation of this pattern for monitoring and auditing service invocations.

Assembly Diagram

Look up Table

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field — 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Monday, August 3, 2009

Dynamic Routing using WebSphere Enterprise Service Bus (WESB)

One of the most common requirements I have heard from customers who are using an ESB is: "I want to dynamically route information to several of my end systems. I don't want to change my code when I introduce a new system to the mix that requires the same data."

Careful analysis is required to implement a solution to this requirement as there are two parts to this.

1. The ability to invoke an end point dynamically (the requirement statement is crystal clear about that).

2. The second part is how do I define my routing logic? How do I describe it? How can I change it at runtime?

Setting the endpoint header dynamically on the endpoint is supported by the Service Component Architecture (SCA) framework and therefore we do have an easy solution to the first part of the requirement. However there is no standard primitive or a defined approach in the WESB product to achieve the second aspect of the dynamicity requirement.

There are multiple solutions to this:

1. We can use WebSphere Business Services Fabric (WBSF) to provide this capability.

2. Or we can build custom solutions to achieve this goal.

One such approach that we have used is to make the Business Rules component in WebSphere Process Server (WPS) a 'Routing Controller' and define business rules to describe the routing logic. The out of the box business rules capability in WPS provides us with an editor to edit these rules and dynamically update them at runtime. The advantage of this approach is that we don't have to custom code any of the logic and we are able to use the out of the box rules component. However, since the business rules components are part of WPS, this solution is not applicable for pure WESB customers.
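The idea of a 'Routing Controller' driven by editable rules can be sketched in a few lines of Python. This is a conceptual illustration, not the WPS business rules API; the class names, message fields and endpoint URLs are all hypothetical. The point is that the calling code asks the controller for endpoints and never changes, while the rule set can be replaced at runtime.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class RoutingRule:
    """One routing rule: if the predicate matches, send to the endpoint."""
    name: str
    matches: Callable[[Dict], bool]
    endpoint: str


class RoutingController:
    """Holds the current rule set and answers 'where does this message go?'."""

    def __init__(self) -> None:
        self._rules: List[RoutingRule] = []

    def set_rules(self, rules: List[RoutingRule]) -> None:
        # Replacing the list is the analogue of editing rules at runtime.
        self._rules = list(rules)

    def endpoints_for(self, message: Dict) -> List[str]:
        return [rule.endpoint for rule in self._rules if rule.matches(message)]


controller = RoutingController()
controller.set_rules([
    RoutingRule("orders to CRM", lambda m: m["type"] == "order", "http://crm.example/in"),
])
message = {"type": "order", "amount": 1200}
before = controller.endpoints_for(message)

# A new end system needs the same data: only the rules change, not the caller.
controller.set_rules([
    RoutingRule("orders to CRM", lambda m: m["type"] == "order", "http://crm.example/in"),
    RoutingRule("big orders to audit", lambda m: m["amount"] > 1000, "http://audit.example/in"),
])
after = controller.endpoints_for(message)
```

Each endpoint returned by the controller would then be set on the outgoing message and invoked dynamically, which is the part SCA already supports.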

Rajiv Ramachandran first joined Prolifics as a Consultant, and is currently the Practice Director for Enterprise Integration. He has 11 years experience in the IT field — 3 of those years at IBM working as a developer at its Object Technology Group and its Component Technology Competency Center in Bangalore. He was then an Architect implementing IBM WebSphere Solutions at Fireman’s Fund Insurance. Currently, he specializes in SOA and IBM’s SOA-related technologies and products. An author at the IBM developerWorks community, Rajiv has been a presenter at IMPACT and IBM's WebSphere Services Technical Conference.

Tuesday, July 28, 2009

IBM CloudBurst Appliance Part 2 – What’s inside the Box?

In the first blog entry on this topic, I talked about IBM offerings for cloud computing (i.e. the CloudBurst appliance) and discussed its high level overview and capabilities. In this second part I will discuss the value proposition of the device and explore the technical architecture of the device - mainly what it consists of, or what you are buying when you spend more than 200k to buy one.

So again, what is CloudBurst? CloudBurst is a service delivery platform which consists of prepackaged and pre-configured servers, storage, networking, and software needed to set up a private cloud. These resources (hardware and software) can be provisioned and enabled to provide virtual server resources for application development, testing, and other activities that normally have to wait on physical hardware to be procured and deployed.

An important question to ask here is what value this device is going to provide. In today’s market customers are largely investing in two categories of solutions: those which provide efficiency in the data centers across their IT organizations, and those which help them differentiate from their competitors. Typically, IT data centers spend 30% to 50% of resources in developing, testing and configuring environments.

Sometimes it takes months to establish data center environments and configure them to be consistent with the requirements. With CloudBurst, a developer can log into a self service portal, select the resources required and the timeframe, select an image to provision from the service catalog, and be ready to go in minutes as opposed to months. So the idea is that if the efficiency of the data centers improves through the use of the CloudBurst device, the available resources can focus and spend time on innovating products which differentiate them from the competition.

So how does CloudBurst device accomplish this? To answer this, we have to look inside the box. Unlike DataPower devices which are hardware devices built for specific purposes (e.g. xml acceleration, security, integration etc), CloudBurst actually consists of several different hardware devices/components which are pre built and packaged for specific architecture needs and cloud requirements. A typical CloudBurst device (base configuration) consists of:

- 1 42U rack

- 1 3650M2 Systems Management Server

- 1 HS22 cloud management blade

- 1 BladeCenter H chassis with redundant Ethernet and Fibre Channel switch modules

- 3 managed HS22 blades

- DS3400 FC attached storage

Some important things to note here: the 3650M2 management server hosts the pre-packaged software stack (discussed below) and the HS22 cloud management blade hosts the IBM Blue Cloud computing software. The 3 managed HS22 blades host the client provided VMware images, which can be cataloged for on demand provisioning. I won’t go into details of each of the individual hardware components (networking, storage etc.) in this blog, as their descriptions can be found on the IBM website.

The device also comes pre packaged with IBM software, which includes:

- Systems Director 6.1.1 with BOFM, AEM; ToolsCenter 1.0; DS Storage Manager for DS4000 v10.36; VMware VirtualCenter 2.5 U4; LSI SMI-S provider for DS3400

- VMware ESXi 3.5 U4 hypervisor on all blades

- Tivoli Provisioning Manager v7.1

- DB2 ESE 9.1; WAS ND 6.1.0.13; TDS 6.1.0.1

- Special purpose customized portal and appliance wizard that enables client portal interaction

- Tivoli Monitoring v6.2.1

- OS pack

Note that it includes third party software from VMware (virtual center and ESX hypervisor) and IBM cloud computing software which makes use of Tivoli provisioning software components. An interesting point to note is that even though the CloudBurst device consists of several hardware and software components, it is sold, delivered and supported as a single product.

In Part 3 of this blog series, I will discuss the logical architecture of the CloudBurst device and a practical scenario which demonstrates its usage in a real client environment.

Samuel Sharaf is a Solution Director at Prolifics on the West coast with real world customer expertise with Portal implementations, Dashboard, Forms and Content Management. Sam also has expertise with migrating applications from non-IBM platforms to IBM WebSphere Application and Portal Servers.

Monday, July 20, 2009

IBM CloudBurst Appliance – Part I

Having lived and breathed in the IBM technology world for the last 10 years, I was intrigued when IBM made the announcement a few months ago (June 16th, 2009) about the CloudBurst appliance. The name of the appliance, CloudBurst, was an interesting one, and what it could do sounded almost magical. A device which can bring together the hardware, software and services needed to establish a private cloud? It sounded too good to be true. Obviously, the name of the appliance suggested that it had something to do with cloud computing, a concept which is fast gaining popularity.

As part of our technology group initiative, I decided to take a deep dive into understanding the appliance, its capabilities as they relate to cloud computing and IBM technology, and ultimately how we can position it to our customers.

In this first part of blog series on CloudBurst, I will share the device overview and its general capabilities at high level. The subsequent blogs will go in more depth in describing its practical scenarios, architecture and real world usage.

IBM CloudBurst provides everything you need to start delivering services much faster than you do today, while reducing costs and providing the benefits of a dynamic infrastructure. It is a pre-packaged private cloud offering that integrates the service management system, server, storage and services needed to establish a private cloud. This offering takes the guesswork out of establishing a private cloud by pre-installing and configuring the necessary software on the hardware and leveraging services for customization to your environment. All you need to do is install your applications and start leveraging the benefits of cloud computing, like virtualization, scalability and a self-service portal for provisioning new services.

Summarizing the capabilities:

- A service delivery platform that is pre-integrated at the factory

- Built-for-purpose based on the architectural requirement of specific workloads

- Delivered and supported as a single product

- Prepackaged, pre-configured servers, storage, networking, software and installation services needed to stand up a private cloud

IBM CloudBurst includes a self-service portal that allows end users to request their own services and improves service delivery, automation to provision the services, and virtualization to make system resources available for the new services, thus reducing costs significantly. This is all delivered through the integrated pre-packaged IBM CloudBurst offering, which includes implementation services and a single support interface to make it easy.

In part II of this series we will explore the individual features of the appliance in more detail. Stay tuned…

Monday, July 13, 2009

Portals: The Next Generation

We’ve been building Portal applications for years…with over 230 implementations under our belt. The obvious “first implementation” done by most organizations is to create a Content Portal, otherwise known as Employee Portal, Intranet Portal, etc. We can get a typical content portal up and running in 3 weeks and can obviously do more extensive custom implementations. Today we are seeing the “next generation” trend for these portals to be adding in Dashboards and adding in Social Networking capabilities.

For Social Networking we are starting to introduce Lotus Connections and Quickr into a Portal environment to benefit from communities, blogs, wikis, etc. We’ve done this internally at Prolifics as well for our own Intranet and it’s really improving our ability to collaborate and share information.

For Dashboards, portals have been accessing dashboards, reports, and scorecards already, and you can also start to make those items actionable, such as drilling down for quicker problem resolution and associating reports with different applications. But many dashboard solutions require programming. If you already own Cognos for your Business Intelligence data and have reports, then you can start to make these available within a portal and give them actionable qualities as well, generally gaining a richer overall environment. And in this case you don’t require portal programming capabilities.

When will you start exploiting your portal to capitalize on the information available to you? These little changes can make a big difference to the value of your existing portal.

Devi Gupta directs the market positioning for Prolifics and helps manage the company’s strategic alliance with IBM. Under her guidance, Prolifics has made the critical transition from a product and services company to becoming a highly reputable WebSphere service provider and winner of several awards at IBM including the Business Partner Leadership Award, Best Portal Solution, IMPACT Best SOA Solution, and Overall Technical Excellence Award. Ms. Gupta has been key to Prolifics and has fulfilled a variety of principal functions since joining Prolifics in 1991, from Product Manager to VP of Marketing. Her computer science background has allowed Ms. Gupta to move freely between the engineering and the business development/marketing sides of the technology industry, which gives her a unique ability to apply the client’s perspective to the on-going evolution of Prolifics’ technology and solutions.

Monday, June 29, 2009

Collaboration and Rational Tooling

Running effective software development projects requires a certain level of tool support. For most organizations, the tools they have come from multiple vendors, and may also include a mix of both licensed and open-source tooling. As tools tend to be acquired to meet point problems, it can be easy to lose sight of the big picture – how your team and tools fit together to deliver the business solutions needed. Integration (SOA) projects bring with them a new set of challenges which may require tooling of a type that you don’t currently have. Do you find yourself asking any of the following?

- How do I drive my team’s work in a way that allows me to track progress?

- How do I figure out what work and changes went into a build of the code created 6 months ago?

- How do I keep my distributed team aware of each other’s actions and progress?

- How do I ensure that my best practices are being adhered to?

These are questions we hear on a regular basis from our customers. Having tested and used various tooling, we have had great success with Rational Team Concert which is highly effective for team collaboration. Below are some slides I've put together which illustrate what I'm referring to...

Thursday, June 18, 2009

Think Fast! Rational AppScan using a SaaS Model

With all the buzz around SaaS these days there's a cool application of Rational AppScan for Web Application Security that may be of interest. You can now purchase Rational AppScan using a SaaS model. It basically is an outsourced version that is hosted and managed by security experts at IBM. You buy a subscription service so there is no infrastructure cost and setup time. Could be a good way to get started...

Tuesday, June 9, 2009

Dynamically Loading Java Modules

Did you ever feel there should be a way to modularize your Java modules and dynamically load and unload them as needed? In fact, move away from the whole classpath headache altogether? Well, you’re not alone. The OSGi Alliance (http://www.osgi.org/Main/HomePage) - Open Services Gateway initiative, a name that is now obsolete - is a non-profit corporation founded in March 1999 with the mission to develop a standard Java-based service platform that can be remotely managed.

In case you think OSGi is new, there are OSGi frameworks in many of the systems we use, examples include:

- Eclipse – Integrated Development Environment Plug-ins are OSGi modules

- Eclipse Equinox – Server Framework

- WebSphere Application Server v6.1

- Lotus Expeditor

- Jonas v5

- JBoss is replacing JMX with OSGi

- Spring Dynamic Modules brings Spring applications to OSGi

So if you have a server side development project where you have to reload classes and modules without a server restart, then take a closer look at OSGi, or maybe just build a Java application for your cell phone.

Mike Hastie is an experienced solutions architect and implementation manager with a strong background in business driven and improvement focused IT solutions. He has over 20 years of IT experience covering project management, enterprise architecture, IT governance, SDLC methodologies, and design/programming in a client/server and Web-based context. Prior to joining Prolifics, Mike was a co-founder and Director of Promenix, a successful systems integrator focused on IBM software implementations. Mike also has significant large-scale systems implementation experience using SAP ERP, data warehouses, and portals during employment with Deloitte Consulting and Ernst & Young where he specialized in messaging and integration technologies using the WebSphere brand family.

Tuesday, June 2, 2009

The Curious Case of Web Services Migration - Part II

As I mentioned in the previous blog entry, the final goal was to develop a Maven-powered environment in which the deployable unit - in our case the EAR file - would be generated by a set of scripts, starting from checking out versioned code from PVCS. I would be lying if I told you that I liked Maven at first sight. My initial feelings were anger and frustration as Maven-enabled RAD essentially reduced RAD to just a fancy code editor and nothing more. Gone was round-trip interactive development, and my productivity as a developer really suffered. Only by the end of the project did I find out how to keep RAD effective and fully engaged and still be able to build using Maven. At the very end of the project I started to really appreciate the effectiveness of Maven as a build tool, especially when it comes to building deployable units for different environments in a uniform and reliable way. Its ability to manage versioned dependencies is outstanding given the relative simplicity with which this is achieved.

Anyway, for regular Dynamic Web Projects the task would be trivial - Maven already has a plug-in to generate all deployable artifacts. But for Web projects with Web services there was nothing I could use, because a number of generated artifacts simply did not fit into Maven’s rigid default directory structure. I faced the task of developing a new Maven plug-in just for the tasks at hand (since I am mentioning this, you may guess that the plug-in was successfully implemented, but read below to find out at what cost).

To assess the scope of the effort involved, let me just list the tasks accomplished.

Given: a set of RAD projects fresh from PVCS, each with a Maven pom.xml. One specialized project held all WSDL and XSD files. The parent folder had a pom.xml with our custom Maven Web services plug-in properties and configurations. Note that only scripts and libraries available with the WAS 6.1 run-time are used for code generation. No part of RAD is used by the Maven plug-in (in fact, our customer uses AIX to run the build scripts).

Below is an overview of the custom plug-in functionality:

- For each WSDL listed in the parent pom.xml, run the WSDL2Java script and create a temporary folder structure with all Java and XML configuration files.

- For each generated webservices.xml, replace the generated placeholder with the actual servlet class name (the WSDL2Java script does not do that by design).

- Combine all webservices.xml files into a single webservices.xml to be put in the target project.

- Collect all mapping XML files and put them together in the target project.

- Collect all servlet names and class names for each WSDL and add them as servlet/servlet-mapping entries to the Web Deployment Descriptor in the target project.

- Copy WSDL and XSD files into the target project's WEB-INF/wsdl folder.

- Copy all generated Java sources and put them together with the existing source code.

- Pass the resulting project to the standard Maven Web project plug-in to be compiled and built.
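Two of the steps above (filling in the placeholder and producing the servlet/servlet-mapping entries) can be sketched in plain Java. This is only an illustration under assumptions, not the plug-in's actual code: the placeholder token `@SERVLET_PLACEHOLDER@`, the `/services/` URL pattern, and the class and method names are all hypothetical, since the post does not show them.

```java
import java.util.Map;

// Illustrative sketch only; names and tokens below are assumptions, not the real plug-in.
final class WebDescriptorSketch {

    // Hypothetical token standing in for whatever WSDL2Java writes into webservices.xml.
    static final String PLACEHOLDER = "@SERVLET_PLACEHOLDER@";

    // Replaces every occurrence of the placeholder with the actual servlet class name.
    static String replacePlaceholder(String webservicesXml, String servletClassName) {
        return webservicesXml.replace(PLACEHOLDER, servletClassName);
    }

    // Builds the <servlet> and <servlet-mapping> fragments for the Web Deployment Descriptor.
    // Keys are servlet names, values are servlet class names.
    static String servletEntries(Map<String, String> classByServletName) {
        StringBuilder sb = new StringBuilder();
        // Emit all <servlet> elements first, then all mappings.
        for (Map.Entry<String, String> e : classByServletName.entrySet()) {
            sb.append("<servlet>\n")
              .append("  <servlet-name>").append(e.getKey()).append("</servlet-name>\n")
              .append("  <servlet-class>").append(e.getValue()).append("</servlet-class>\n")
              .append("</servlet>\n");
        }
        for (String name : classByServletName.keySet()) {
            sb.append("<servlet-mapping>\n")
              .append("  <servlet-name>").append(name).append("</servlet-name>\n")
              // The URL pattern is an assumed convention, not taken from the post.
              .append("  <url-pattern>/services/").append(name).append("</url-pattern>\n")
              .append("</servlet-mapping>\n");
        }
        return sb.toString();
    }
}
```

A real plug-in would more likely edit the descriptors through an XML parser than through string concatenation; plain strings are used here only to keep the sketch short.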

In the end, the Prolifics team accomplished everything the client asked us to do, and we left the site with the application up and running in production with no problems. The only thing they asked with amazement was: "How did you do that, guys?"

Vladimir Serebryany is a Senior Consultant at Prolifics with in-depth knowledge and broad hands-on experience with the J2EE environment as well as expertise in EJBs, Servlets, JSP and JSF. Excelling at migrations, Vladimir has over 9 years of experience with a wide range of complementary skills including WebSphere, WebSphere MQ, WebSphere Business Integration Message Broker, UNIX, C/C++, Java, HTML/ASP, JavaScript, and Visual Basic. He has served in team leader/senior developer roles in large, complex projects and configurations with clients in the financial, insurance and telecommunication industries - among others.

Monday, May 25, 2009

The Curious Case of Web Services Migration - Part I

During one of my recent BEA WebLogic to IBM WebSphere migration assignments at a major insurance company, I encountered an interesting problem.

The client had a large number of Web services which were running on BEA WebLogic and consumed by a .NET front-end. In the course of migration, our team had to not only migrate code to WebSphere but also help the customer refactor the source code repository and create a set of Maven scripts to provide a fully scripted build/deploy process for all environments - from unit test to production.

We started with the typical set of issues:

- WSDL files and corresponding XSD files had relative namespaces in them - and the spec strongly recommends absolute ones

- Source code was stored in typical WebLogic structures and deployable components were created by running four (!) different Ant scripts

- Code and WSDL files were stored in PVCS and the revision history had to be preserved

- The WSDL2Java wizard was invoked every time the scripts ran, so binding Java code was generated on the fly and not stored in PVCS. That was a wise decision, but the binding implementation classes, which contained the client's code, had to be protected from being overwritten.

The first issue was easy to deal with: a couple of sed/awk scripts took care of the namespaces. We gave the WSDL files back to the client; they validated them against their .NET environment and found no problems with the new namespaces.
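The original sed/awk scripts are not shown here. As a rough Java equivalent of the same idea, the sketch below prefixes relative namespace values with an absolute base URI. The base URI `http://example.com/`, the class name, and the set of attributes it touches (`targetNamespace`, `namespace`, `xmlns`, `xmlns:prefix`) are assumptions for illustration only.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative sketch only; not the sed/awk scripts used on the project.
final class NamespaceFixSketch {

    // Matches namespace-bearing attributes and captures their quoted value.
    private static final Pattern NS_ATTR = Pattern.compile(
            "\\b((?:targetNamespace|namespace|xmlns(?::\\w+)?)\\s*=\\s*\")([^\"]*)\"");

    // Returns the XML text with every relative namespace value prefixed by baseUri.
    // baseUri is assumed to end with '/'.
    static String makeAbsolute(String xml, String baseUri) {
        Matcher m = NS_ATTR.matcher(xml);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = m.group(2);
            // A value that starts with a URI scheme (e.g. "http:") is already absolute;
            // an empty value (xmlns="") is left untouched.
            boolean keep = value.isEmpty() || value.matches("[A-Za-z][A-Za-z0-9+.-]*:.*");
            String fixed = keep ? value : baseUri + value;
            m.appendReplacement(out, Matcher.quoteReplacement(m.group(1) + fixed + "\""));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Because the rewrite changes the namespaces that service consumers see, the regenerated files still need the kind of validation the client ran against their .NET environment.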

Item #2 was also relatively easy to solve - we manually rearranged code into a Rational Application Developer (RAD) structure and that was it. RAD is really good at that sort of thing. While doing that we also extracted PVCS archives as files from the central repository and rearranged these archives in the file system according to the new directory structure. We sent back these directories to the client’s PVCS team, and they imported these archives back into a new PVCS project. That way all the previous generations of source code have been preserved. We now were able to work with PVCS and track a file’s revision history right back to Noah's Ark.

Now we had to deal with WSDL-to-Java file generation. There were three sorts of files: custom code with business logic, binding code and classes generated from data type definitions in XSD files, and binding implementation classes. We did not have to worry about the first two types. The first set was permanent code, which we moved to a separate utility Java project for simplicity of maintenance. The second was what it was: generated code which the wizard took care of. Only the binding implementation classes were something we had to take care of. On one hand, all the method stubs are generated by the WSDL2Java wizard; on the other hand, these methods already contained the customer's code. If we allowed the wizard to run "freely," this code would be overwritten.

Here we needed a trick, and we found one. As is well known, RAD may designate one or more folders in the project to be Source folders, meaning it will compile all Java code in these and only these folders into binary form. It happens that the built-in RAD wizards are very sensitive to the order in which Source folders are listed in the "Order and Export" tab of the "Java Build Path" configuration page. If the WSDL2Java wizard for top-down Web services generation is invoked either from the pop-up menu or from the wsgen.xml script, it always places all generated files into the folder listed first in the "Order and Export" tab. That "observation" gave us the opportunity to solve the problem. We would define two Source folders: “src/main/java” and “src/main/java2.” “src/main/java” would be listed first in the "Order and Export" tab, and “src/main/java2” would be second. The single "target/bin" folder was designated for binary files. We placed all existing binding implementation files into the “src/main/java2” folder, and we put the WSDL and XSD files into a separate project. (Note that by using the “src/main/java” folder structure we were already aiming to use Maven.)

From that point on, if a WSDL definition changed, we would clean the “src/main/java” folder and run the Web services wizard/script against the new WSDL/XSD files. Newly generated binding files would go into the “src/main/java” folder. As long as the binding implementation files were also on the classpath, the WSDL2Java wizard was smart enough not to regenerate the *BindingImpl.java files, but rather to pick them up from the “src/main/java2” folder. The IBM-provided ws_gen Ant task, script, and sample properties files were customized to run the whole task at any time for all 20 WSDLs we had in the service definition (see http://publib.boulder.ibm.com/infocenter/radhelp/v7r0m0/topic/com.ibm.etools.webservice.was.creation.core.doc/ref/rtdwsajava.html). We didn't include the “src/main/java” folder in PVCS and never had to check in transient generated code.

The remaining issue was to reproduce in RAD the style in which WebLogic's WSDL2Java script generated the code. By tweaking the WSDL2Java options on the Window->Preferences->Web services->Websphere->JAX-RPC Code Generation->WSDL2Java tab in RAD, we matched the style of generated code so that RAD-generated code was an almost drop-in replacement for WebLogic-generated code. The problem was solved - but only for the development phase so far.
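The regeneration routine described above boils down to two rules: wipe the generated folder, and never regenerate a *BindingImpl.java file that already exists among the hand-written sources. The Java sketch below illustrates those rules only; it is not how the WSDL2Java wizard or the ws_gen task is implemented, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Illustrative sketch of the clean-and-preserve rule; not the wizard's actual logic.
final class RegenerateSketch {

    // Empties the generated-sources folder (e.g. src/main/java) but keeps the folder itself.
    static void cleanGenerated(Path generatedDir) throws IOException {
        if (!Files.exists(generatedDir)) {
            Files.createDirectories(generatedDir);
            return;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(generatedDir)) {
            // Reverse order lists children before their parent directories.
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            if (!p.equals(generatedDir)) {
                Files.delete(p);
            }
        }
    }

    // Returns false for a binding implementation class that already exists
    // in the hand-written folder (e.g. src/main/java2), true for everything else.
    static boolean shouldGenerate(Path relativeJavaFile, Path handWrittenDir) {
        String name = relativeJavaFile.getFileName().toString();
        boolean isBindingImpl = name.endsWith("BindingImpl.java");
        return !(isBindingImpl && Files.exists(handWrittenDir.resolve(relativeJavaFile)));
    }
}
```

Keeping the two folders separate is what makes the clean step safe: everything under the generated folder is disposable, so it never needs to be checked in.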

Coming in Part II: “My initial feelings were anger and frustration as Maven-enabled RAD essentially reduced RAD to just a fancy code editor and nothing more. Gone was round-trip interactive development, and my productivity as a developer really suffered. Only by the end of the project did I find out how to keep RAD effective and fully engaged and still be able to build using Maven…”

Vladimir Serebryany is a Senior Consultant at Prolifics with in-depth knowledge and broad hands-on experience with the J2EE environment as well as expertise in EJBs, Servlets, JSP and JSF. Excelling at migrations, Vladimir has over 9 years of experience with a wide range of complementary skills including WebSphere, WebSphere MQ, WebSphere Business Integration Message Broker, UNIX, C/C++, Java, HTML/ASP, JavaScript, and Visual Basic. He has served in team leader/senior developer roles in large, complex projects and configurations with clients in the financial, insurance and telecommunication industries - among others.